Michael Dennis

@michaeld1729

RS @DeepMind. Works on Unsupervised Environment Design, Problem Specification, Game/Decision Theory, RL, AIS. prev @CHAI_Berkeley

ID: 1199958835508592640

https://www.michaeldennis.ai/

Joined 28-11-2019 07:51:14

1.1K Tweets

2.2K Followers

740 Following

Today, Edward Hughes and Michael Dennis from Google DeepMind's Open-Endedness Team will be presenting "Open-Endedness is Essential for Artificial Superhuman Intelligence" as an Oral at 4:30pm in Hall C1-3.

We have a speaker change: instead of Jack Parker-Holder, we'll hear from Michael Dennis. The focus of the talk is the same, though, so join if you're interested in generating any environment you can imagine!

On the eve of RL_Conference, some thoughts:
1. If we didn't have this conference, I can't imagine a workshop like the Finding The Frame Workshop existing. It's important but just wouldn't fly elsewhere.
2. The level of community excitement and involvement has been WILD.
1/3

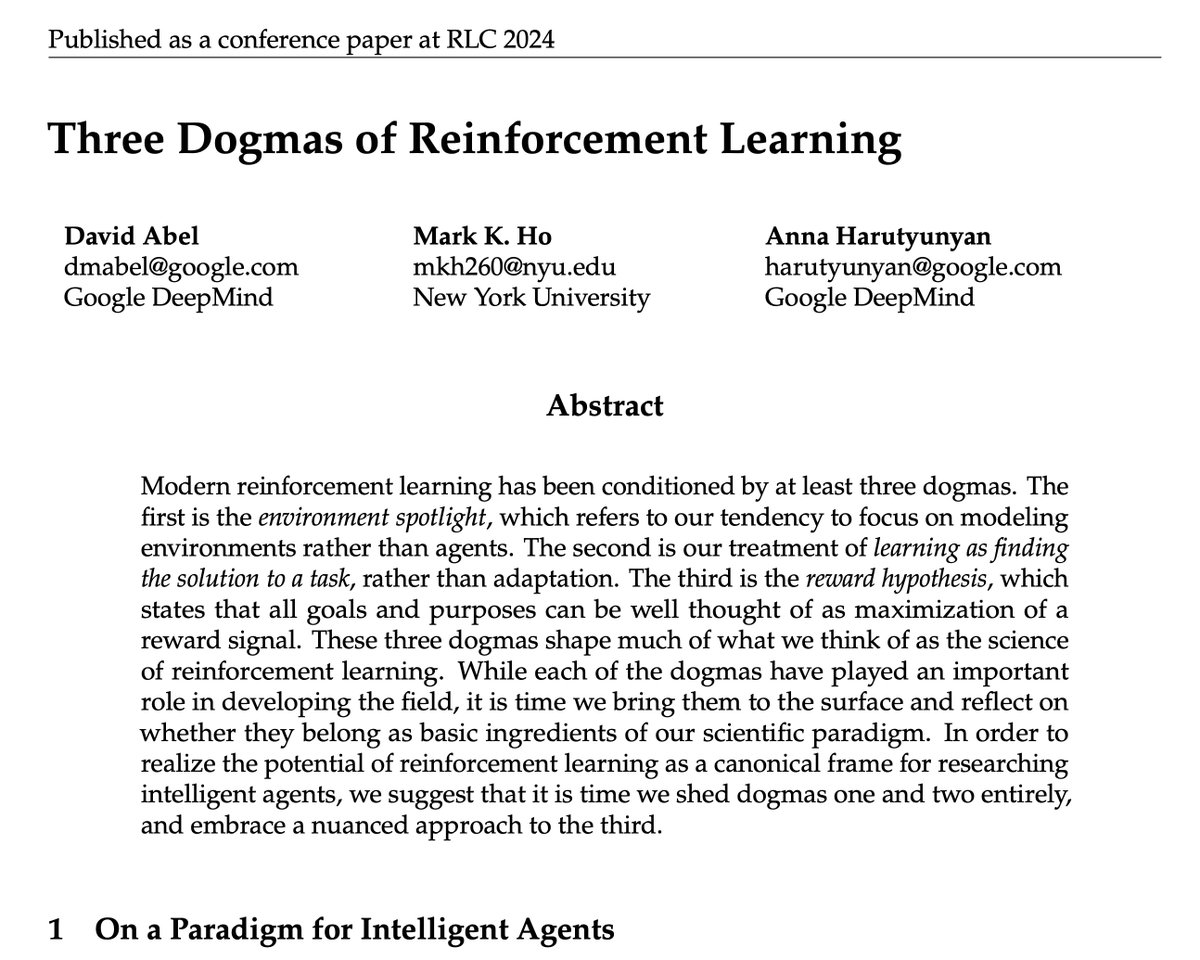

Should AI be aligned with human preferences, rewards, or utility functions? Excited to finally share a preprint that Micah Carroll, Matija Franklin, Hal Ashton & I have worked on for almost 2 years, arguing that AI alignment has to move beyond the preference-reward-utility nexus!