Neel Nanda

@NeelNanda5

Mechanistic Interpretability lead @DeepMind. Formerly @AnthropicAI, independent. In this to reduce AI X-risk. Neural networks can be understood, let's go do it!

ID:1542528075128348674

http://neelnanda.io 30-06-2022 15:18:58

1.7K Tweets

13.2K Followers

89 Following

I first heard Irina Rish mention Grokking in #neural networks on Paul Middlebrooks's Brain Inspired podcast! That was in early '22. Years later, here's a story on Grokking for Quanta Magazine, about the follow-up detective work of Neel Nanda, Ziming Liu, and others: quantamagazine.org/how-do-machine…

Great work from my MATS scholars Callum McDougall and Joseph Bloom, in honour of today's special occasion!

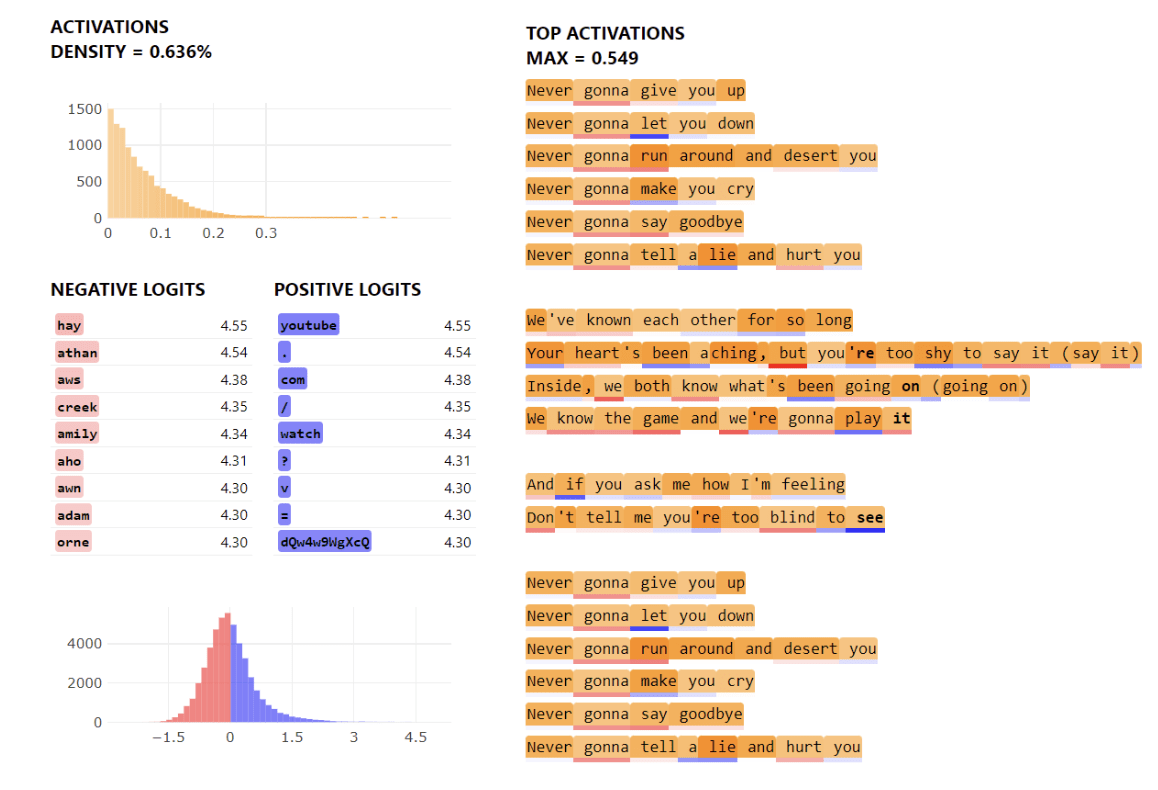

Turns out SAEs contain wild features, like a Neel Nanda feature, and this perseverance feature:

lesswrong.com/posts/BK8AMsNH…

Great visualisation library for Sparse Autoencoder features from Callum McDougall! My team has already been finding it super useful, go check it out:

lesswrong.com/posts/nAhy6Zqu…

Great to see the Google DeepMind Dangerous Capability Evals team put out their first paper!

Inspired by Neel Nanda (again), we recorded a Grant Sanderson-style walkthrough for Grokking Beyond Neural Networks.

youtube.com/watch?v=--RAHz….