Christoph Winter

@christophkw

Director @law_ai_. Law Prof @ITAM_mx. Research Associate @Harvard.

ID: 4919320696

http://www.christophwinter.net 16-02-2016 13:20:17

289 Tweets

510 Followers

285 Following

"Crying wolf: Warning about societal risks can be reputationally risky", the working paper by Lucius Caviola, Matt Coleman, PhD, Christoph Winter, and Joshua Lewis, has been added to our Working Paper Series: globalprioritiesinstitute.org/crying-wolf-wa…

When I posted a poll that found big margins in favor of SB 1047, much discourse ensued about whether the poll was too biased to share. Anyway, here's the question used by the poll that some opponents are now sharing before the Assembly votes on whether to send it to Gavin Newsom:

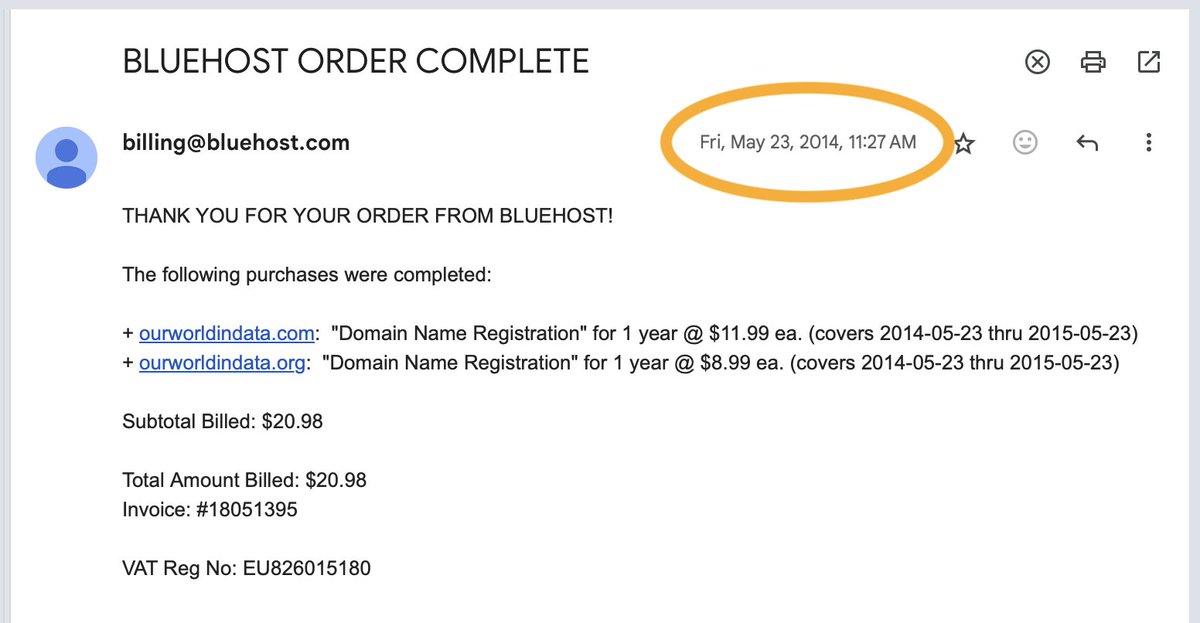

Many of us can save a child’s life, if we rely on the best data. I think this is one of the most important facts about our world, and the topic of my new Our World in Data article: ourworldindata.org/many-us-can-sa…