Oriol Vinyals

@oriolvinyalsml

VP of Research & Deep Learning Lead, Google DeepMind. Gemini co-lead.

Past: AlphaStar, AlphaFold, AlphaCode, WaveNet, seq2seq, distillation, TF.

ID: 3918111614

https://scholar.google.com/citations?user=NkzyCvUAAAAJ&hl=en

16-10-2015 21:59:52

1.1K Tweets

169.169K Followers

84 Following

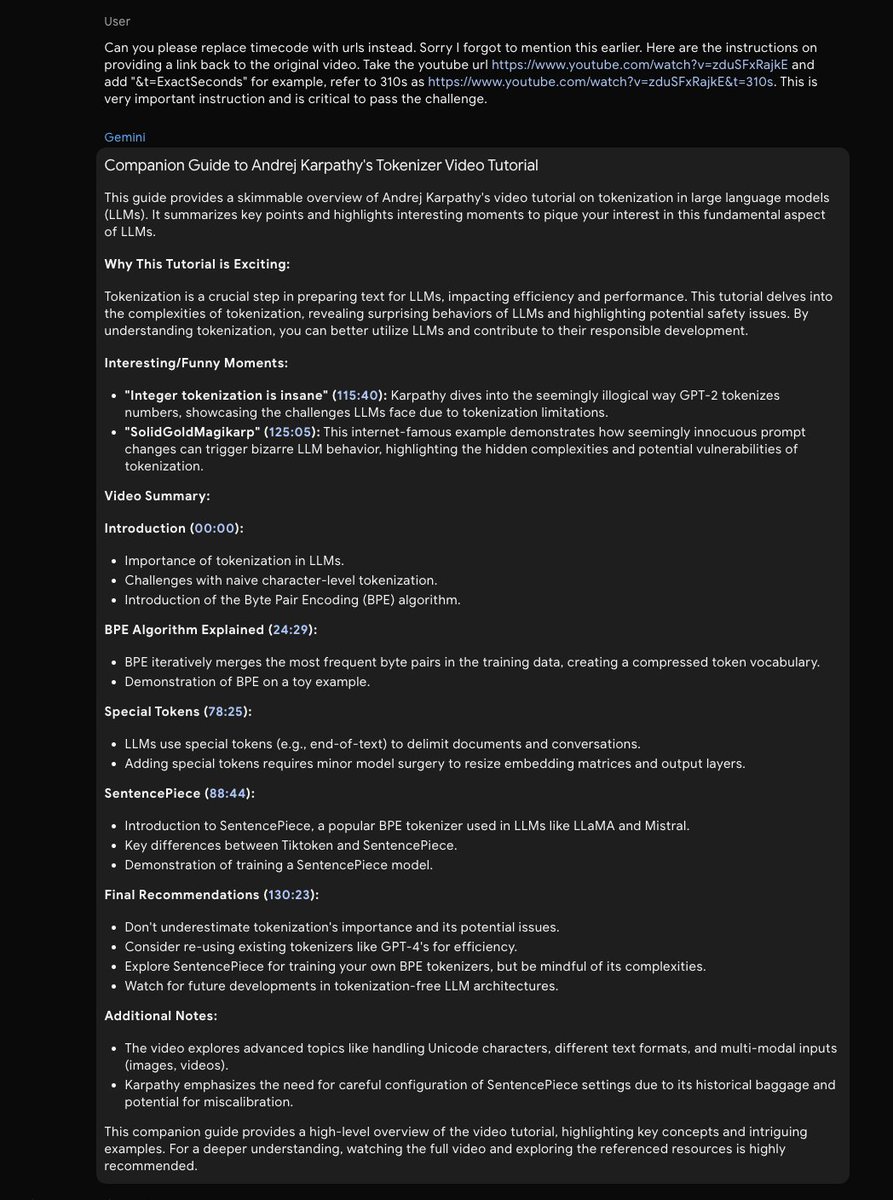

Andrej Karpathy Lucas Beyer (bl16) The team is working hard to bring audio inputs to the AI Studio interface for Gemini 1.5 Pro. We have an internal version that handles audio and video and can sample the video less frequently to increase the length of content that can be handled. Andrej Karpathy, thanks for the

New Google developer launch today:
- Gemini 1.5 Pro is now available in 180+ countries via the Gemini API in public preview
- Supports audio (speech) understanding capability, and a new File API to make it easy to handle files
- New embedding model!

developers.googleblog.com/2024/04/gemini…

Extremely proud of my partner in crime Meire Fortunato and all of the #GraphCast team for this award! The thing is heavy 🪙🪙🪙

New 🔥 No Priors drop: Oriol Vinyals, VP Deep Learning, Google DeepMind. Topics:
* Gemini
* infinite context windows
* where compute goes: pre-training/post-training/test-time search
* reward modeling beyond games
* how we should prep for AGI

It was always great fun to discuss ideas and results with Noam Shazeer and Daniel De Freitas. Welcome back & let's go! ♊️♊️🚀🚀 techcrunch.com/2024/08/02/cha…