Sarah Perrin

@sarah_perrin_

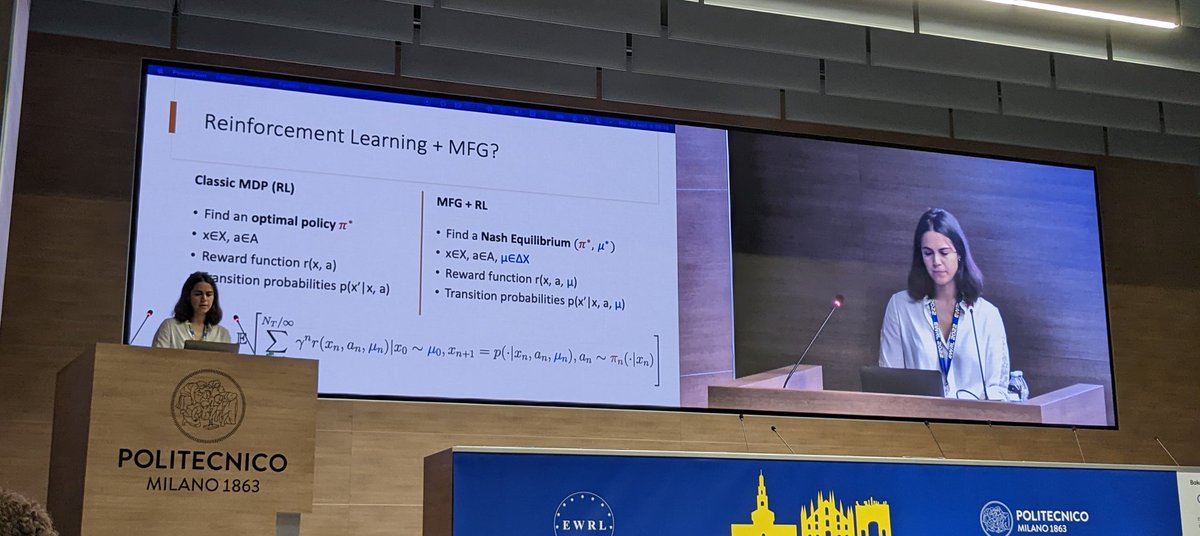

Research Scientist @GoogleDeepMind. Reinforcement Learning, Mean Field Games & Game theory

ID: 1008883085339848704

19-06-2018 01:24:06

34 Tweets

547 Followers

450 Following

🏅💸💰 AWARD ALERT 💰💸🏅 The Cooperative AI Foundation (CAIF) has kindly offered two awards of $500 each for “Best Cooperative AI paper” and “Best Cooperative AI poster”! We are very grateful and look forward to your submissions 😎 cooperativeai.com/foundation

Today at 6PM CET, Sarah Perrin is going to present her work on learning master policies that leverage generalisation properties of mean-field games. A master policy leads to a Nash equilibrium irrespective of the initial distribution. Visit us at R3B for more! 🙏 #AAAI2022

First paper accepted at the ICML Conference! And this means... my first in-person conference 🥳 --> "Scalable Deep Reinforcement Learning Algorithms for Mean Field Games" arxiv.org/abs/2203.11973

Welcome to #EWRL2022 day 2! Today's invited speakers are Sarah Perrin, Niao He, Ann Nowe and Richard Sutton.