iislucas (Lucas Dixon)

@iislucas

machines learn, graphs reason, identity is a non-identity, incompetence over conspiracy, evil by association is evil, expression is never free, stay curious

ID: 101337016

http://pair.withgoogle.com 02-01-2010 23:08:40

241 Tweets

395 Followers

202 Following

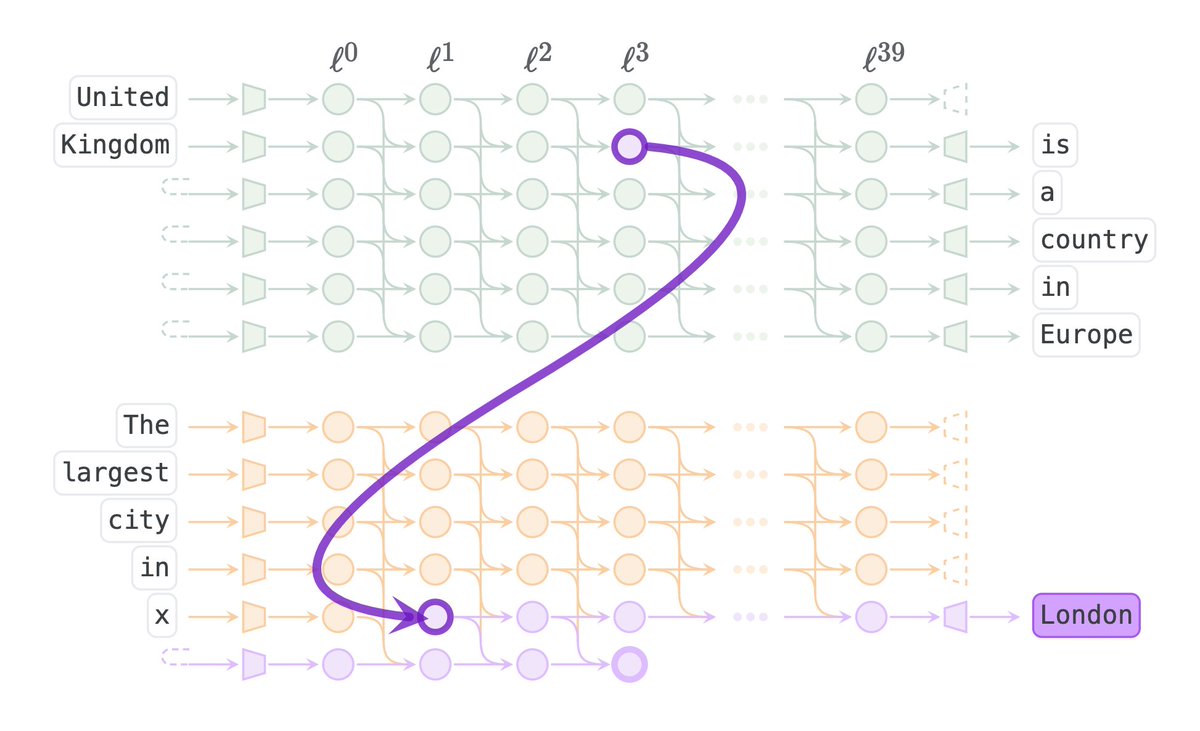

Learning Transformer Programs We designed a modified Transformer that can be trained to solve a task and then automatically converted into a discrete, human-readable program. With Alex Wettig and Danqi Chen. Paper: arxiv.org/abs/2306.01128 Code: github.com/princeton-nlp/… [1/12]

![Dan Friedman (@danfriedman0) on Twitter photo](https://pbs.twimg.com/media/Fx25Ex2XsAETaYE.jpg)

Do Machine Learning Models Memorize or Generalize? pair.withgoogle.com/explorables/gr… An interactive introduction to grokking and mechanistic interpretability w/ Asma Ghandeharioun, nada hussein, Nithum, Martin Wattenberg and iislucas (Lucas Dixon)

I love music most when it’s live, in the moment, and expressing something personal. This is why I’m psyched about the new “DJ mode” we developed for MusicFX: aitestkitchen.withgoogle.com/tools/music-fx… It’s an infinite AI jam that you control 🎛️. Try mixing your unique 🌀 of instruments, genres,

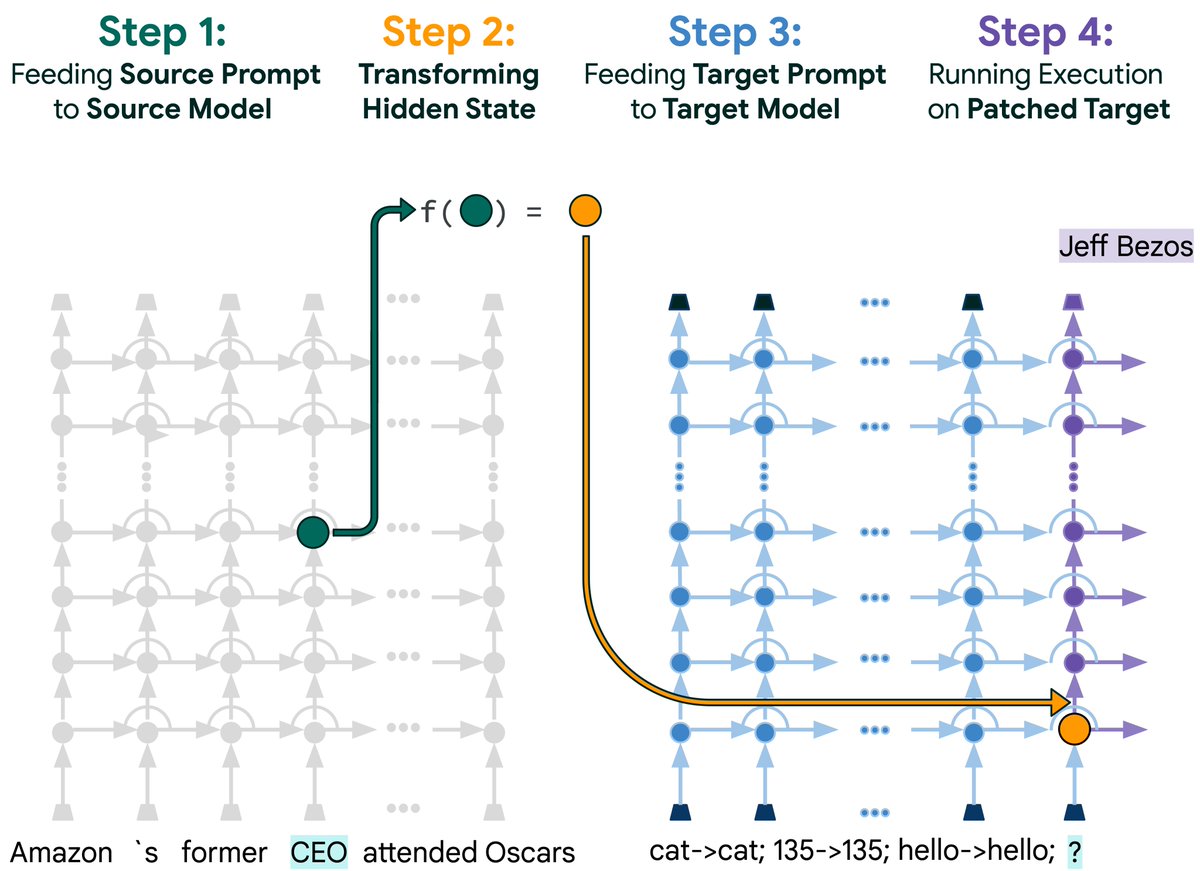

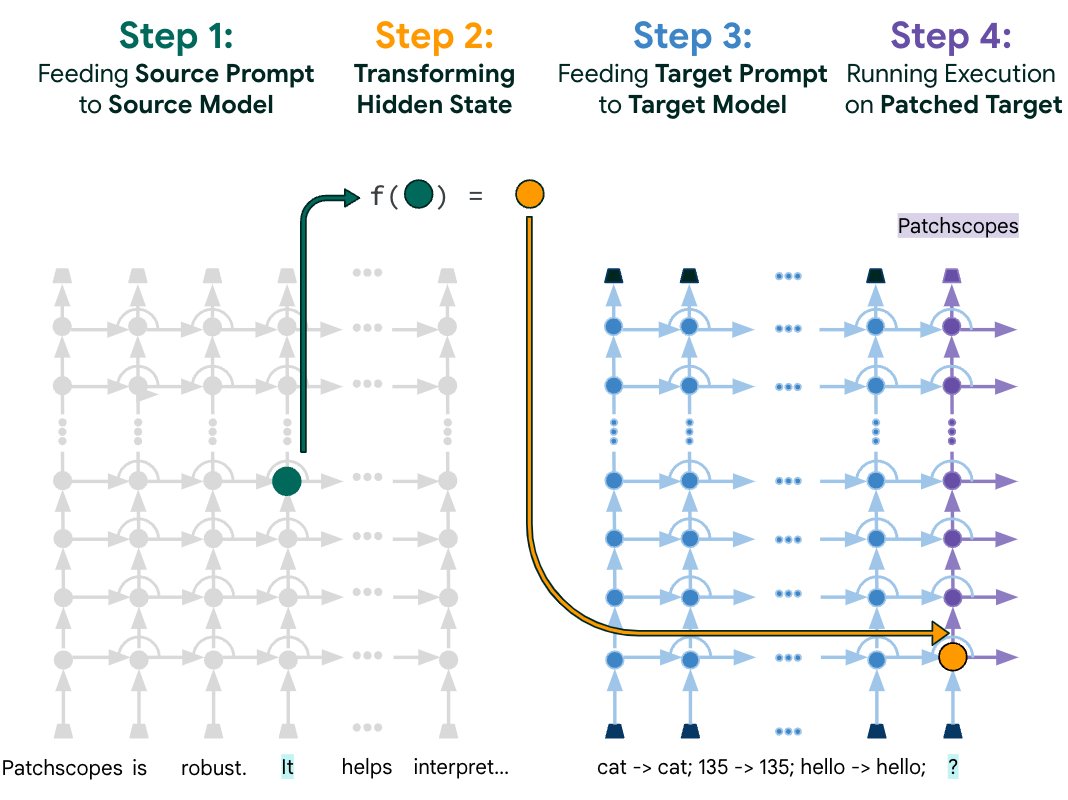

Can Large Language Models Explain Their Internal Mechanisms? pair.withgoogle.com/explorables/pa… An interactive intro to Patchscopes, an inspection framework for explaining the hidden representations of LLMs, with LLMs w/ Asma Ghandeharioun, Ryan Mullins, Emily Reif, Jimbo Wilson, Nithum and iislucas (Lucas Dixon)