Jeremy R Cole

@jeremy_r_cole

Google DeepMind (NLP) | PhD from Penn State

Interested in question answering, information retrieval, cognitive/social linguistics, and beer.

ID: 1409589671194025984

https://jrc436.github.io/

28-06-2021 19:09:07

195 Tweets

373 Followers

286 Following

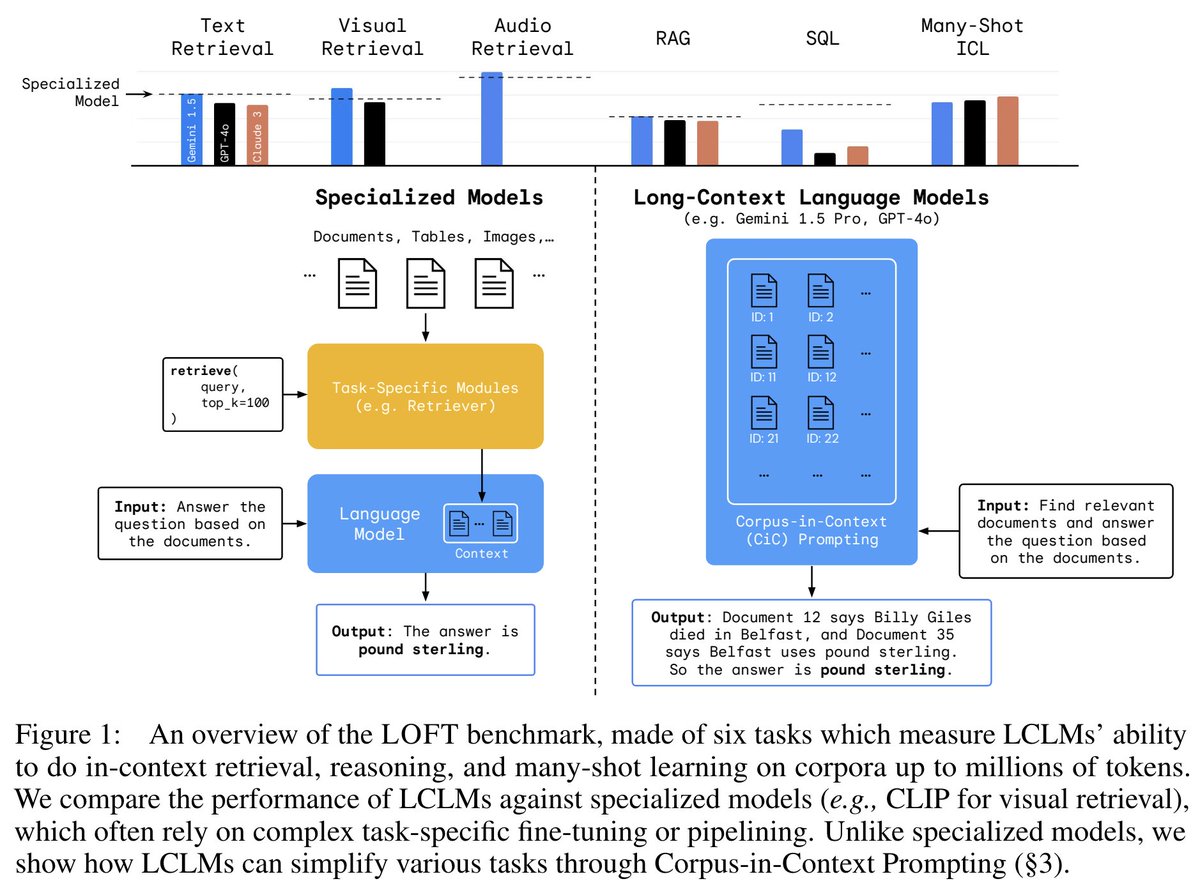

Do long-context LMs obsolete retrieval, RAG, SQL and more? Excited to share our answer! arxiv.org/abs/2406.13121 from the team at Google DeepMind that wrote one of the 1st papers on RAG (REALM) and repeat SOTA on retrieval (Promptagator, Gecko). w/ Gemini 1.5 Pro, the answer is 🧵