Gokul Swamy

@g_k_swamy

phd candidate @CMU_Robotics. ms @berkeley_ai. summers @GoogleAI, @msftresearch, @aurora_inno, @nvidia, @spacex. no model is an island.

ID: 1077849302326697985

https://gokul.dev/ · Joined 26-12-2018 08:51:13

465 Tweets

2.2K Followers

1.1K Following

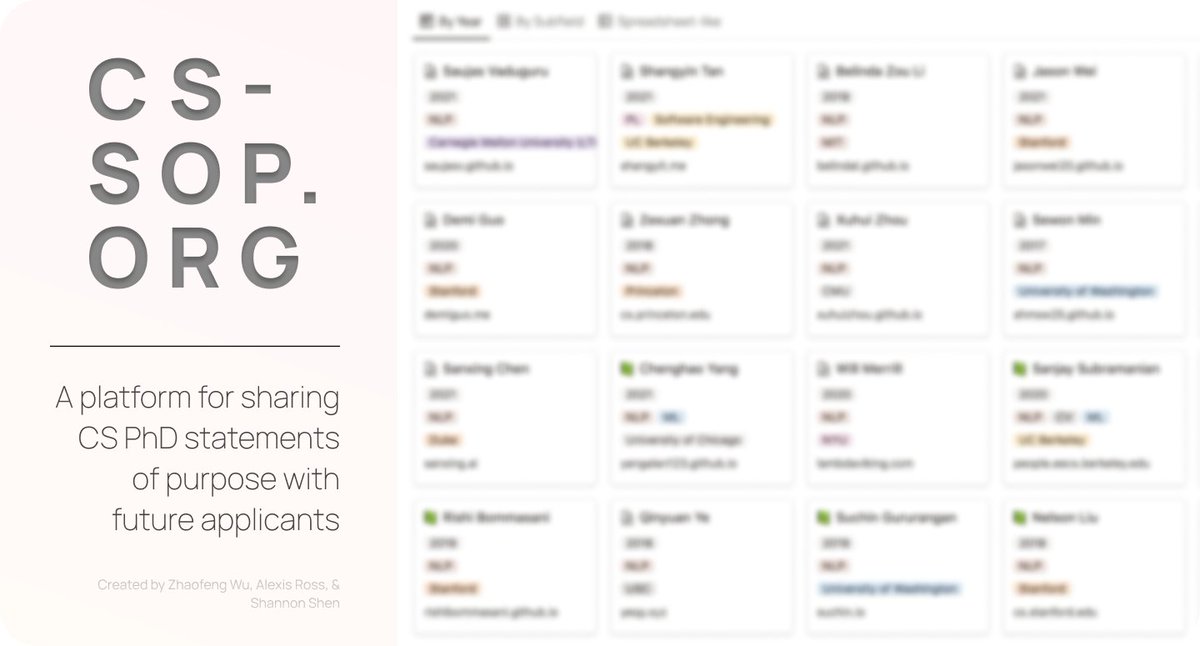

As PhD application deadlines approach, we are super excited to announce cs-sop.org, created by Zhaofeng Wu, Alexis Ross, and @ZejiangS! 💻 cs-sop.org is a platform with statements of purpose generously shared by previous applicants to CS PhD programs. 🧵(1/n)

I'll be at #RLC2024, helping organize the RL Beyond Rewards Workshop, cheering proudly for our 2 orals at the RL Safety Workshop (rlsafetyworkshop.github.io), and perhaps posting a meme or two on @RL_Conference! As usual, DM me if you'd like to talk imitation, RLHF, or what's next :).

If you enjoyed / missed Jingwu Tang's talk on multi-agent IL (arxiv.org/abs/2406.04219) or Nico's talk on efficient IRL without compounding errors (rlbrew-workshop.github.io/papers/15_effi…) at #RLC2024, stop by the RL Safety / RL Beyond Rewards workshop poster sessions this afternoon to hear more!

Sriyash Poddar, Yanming Wan: Given a latent-conditional reward, optimizing policies against it is hard due to scale ambiguity in RLHF methods. We show that methods like self-play preference optimization (SPO, from Gokul Swamy) can help, since rewards correspond to likelihoods rather than arbitrarily scaled utilities. (3/7)