Gintare Karolina Dziugaite

@gkdziugaite

Sr Research Scientist at Google DeepMind, Toronto. Member, Mila. Adjunct, McGill CS. PhD Machine Learning & MASt Applied Math (Cambridge), BSc Math (Warwick).

ID: 954436574468624384

https://gkdz.org 19-01-2018 19:33:06

79 Tweets

3.3K Followers

110 Following

We’re thrilled to announce the first competition on unlearning, as part of the #neurips competition track! Joint work w Fabian Pedregosa, Vincent Dumoulin, Gintare Karolina Dziugaite, Ioannis Mitliagkas, @KurmanjiMeghdad, Peter Triantafillou, Isabelle Guyon and others. Read our blog post!

We're excited to announce the first edition of 🔵🔴 UniReps: the Workshop on Unifying Representations in Neural Models! 🧠 To be held at NeurIPS Conference 2023! SUBMISSION DEADLINE: 4 October Check out our Call for Papers, lineup of speakers and schedule at: unireps.org

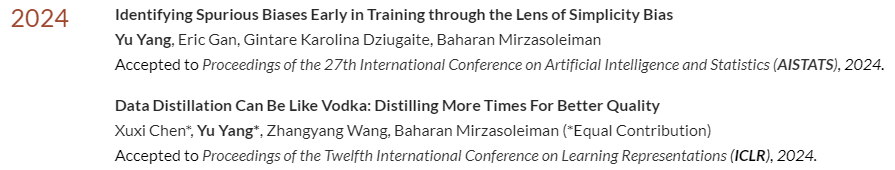

🎉 Two of my papers have been accepted this week at #ICLR2024 & #AISTATS! Big thanks and congrats to co-authors Xuxi Chen & Eric Gan, mentors Atlas Wang & Gintare Karolina Dziugaite, and especially my advisor Baharan Mirzasoleiman! 🙏 More details on both papers after the ICML deadline!

thrilled this paper was accepted to #ICML2024, looking forward to chatting about this with many of you in vienna! camera-ready version available at arxiv.org/abs/2402.08609 w/ Johan S. Obando 👍🏽 Ghada Sokar Timon Willi Clare Lyle Jesse Farebrother Jakob Foerster Gintare Karolina Dziugaite & doina precup

Excited to present our spotlight paper on MoEs in RL today at #ICML2024! Me, Johan S. Obando 👍🏽, Pablo Samuel Castro, and Jesse Farebrother are looking forward to chatting with you! Poster #1207 Hall C 4-9 at 1:30-3:00 pm