Hongli Zhan ✈️@COLM’24

@honglizhan

PhD Student 🤘@UTAustin | ex- @IBMResearch @sjtu1896 | NLP, emotions, affective computing

ID: 1256575491634458624

http://honglizhan.github.io/ 02-05-2020 13:25:44

64 Tweets

565 Followers

863 Following

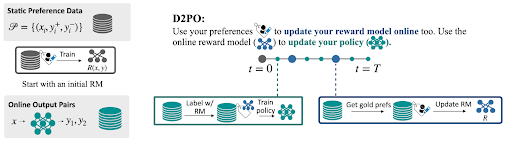

Labeling preferences online for LLM alignment improves DPO vs using static prefs. We show we can use online prefs to train a reward model and label *even more* preferences to train the LLM. D2PO: discriminator-guided DPO. Work w/ Nathan Lambert, Scott Niekum, Tanya Goyal, Greg Durrett

I like the potential of LLMs to deliver specific functions, given the right training. Hongli Zhan ✈️@COLM’24, Desmond Ong, et al. have trained a model to help people think about their problems from alternative perspectives. Excited to see where this goes: arxiv.org/abs/2404.01288

🥳Happy to share that our paper "Evaluating Short-Term Temporal Fluctuations of Social Biases in Social Media Data and Masked Language Models" has been accepted at #EMNLP2024. Congrats to my amazing co-authors: Jose Camacho-Collados and Prof. Danushka Bollegala 📜arxiv.org/pdf/2406.13556

[CL] Large Language Models Produce Responses Perceived to be Empathic

Y K Lee, J Suh, H Zhan… [Microsoft Research & The University of Texas at Austin] (2024)

arxiv.org/abs/2403.18148

- Large Language Models (LLMs) like chatGPT have shown surprising ability to write supportive

![fly51fly (@fly51fly) on Twitter photo](https://pbs.twimg.com/media/GJxFX3QX0AEerIU.jpg)

What differentiates in-group speech from out-group speech? I've been pondering this question for most of my PhD, and the final chapter of my dissertation tackles this question in a super interesting domain: comments from NFL🏈 team subreddits on live game threads. 🧵[1/7]

![Venkat (@_venkatasg) on Twitter photo](https://pbs.twimg.com/media/GRGJJpFWQAILYvL.jpg)