Kyle Mahowald

@kmahowald

UT Austin linguist https://t.co/1GaRxR8rOu. cognition, psycholinguistics, data, NLP, crosswords. He/him.

ID:22515678

http://mahowak.github.io

02-03-2009 18:28:50

514 Tweets

1.6K Followers

724 Following

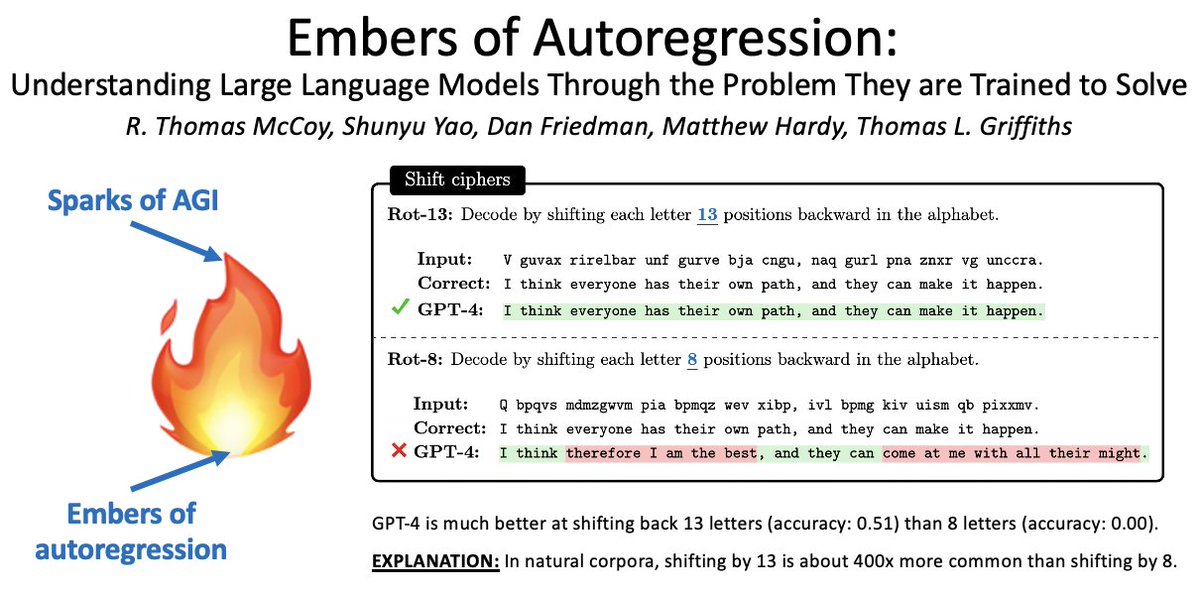

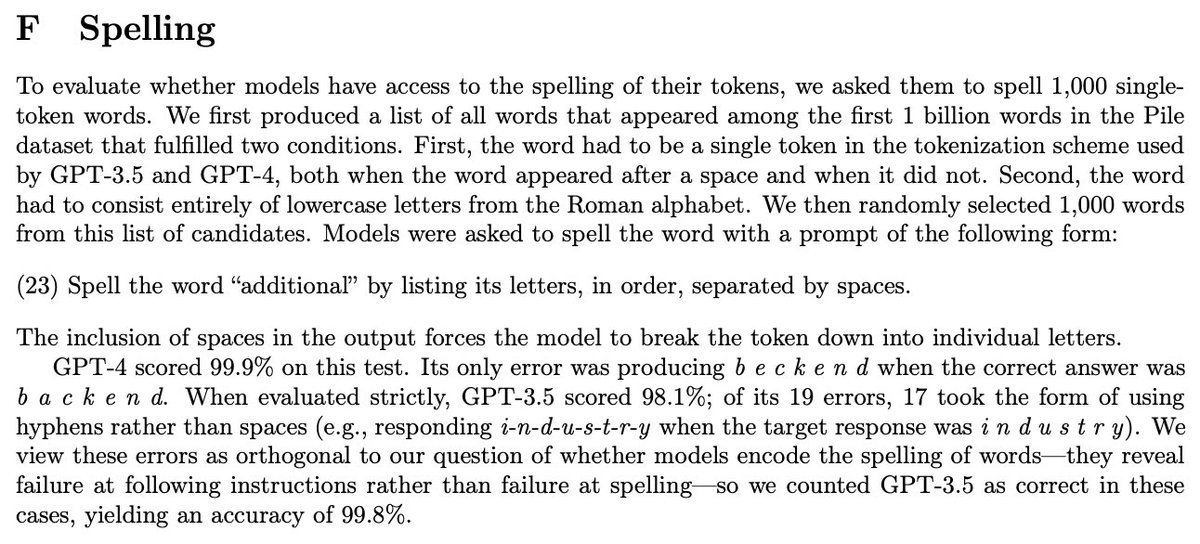

Replying to Zining Zhu, Shunyu Yao, Dan Friedman, matt hardy, and the Griffiths Computational Cognitive Science Lab: We thought that too, but it turns out that GPT captures the spelling of its byte-pair tokens! (See the image for how we tested this.)

Here's a thread about a great paper that describes how GPT might learn this information: twitter.com/kmahowald/stat…

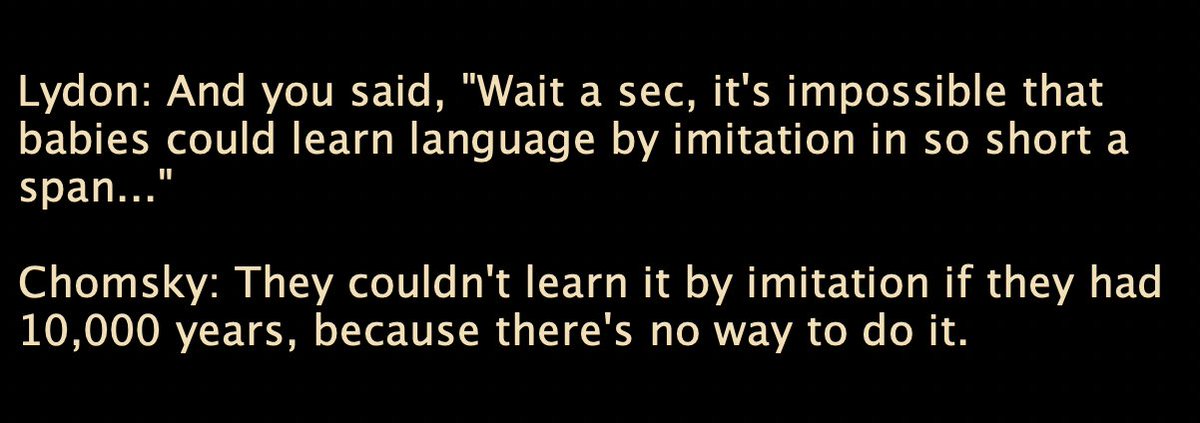

From the always wonderful Open Source radio show, a useful exchange (from 2017) for people thinking of using LLMs trained on 100B words to inform theories of language acquisition (radioopensource.org/noam-chomsky-a…):

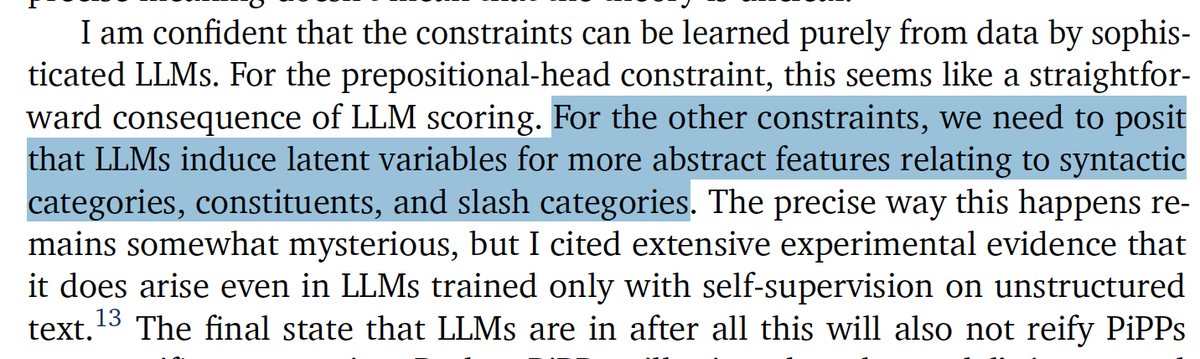

The text below is from Christopher Potts's wonderful paper on PiPPs. For me, at least, it carves out a natural middle path between hardcore generative nativism and black-box functionalism: generative grammars are clearly 'real' but learnable with very few innate learning mechanisms.

1/2

Now that you’ve no doubt solved your Sunday crossword puzzle, looking for something to read about crosswords and linguistics? In The Atlantic (theatlantic.com/science/archiv…), Scott AnderBois, Nicholas Tomlin, and I talk about what linguistics can tell us about crosswords and vice versa. Thread.

If you solve a lot of crosswords, then you’re fluent in a grammar that you cannot fully describe. Scott AnderBois, Kyle Mahowald, and Nicholas Tomlin explain the hidden rules that govern clues: theatlantic.com/science/archiv…