Lili Yu

@liliyu_lili

AI Research Scientist @ Meta AI (FAIR)

ID: 2994229563

23-01-2015 15:07:11

58 Tweets

1.1K Followers

198 Following

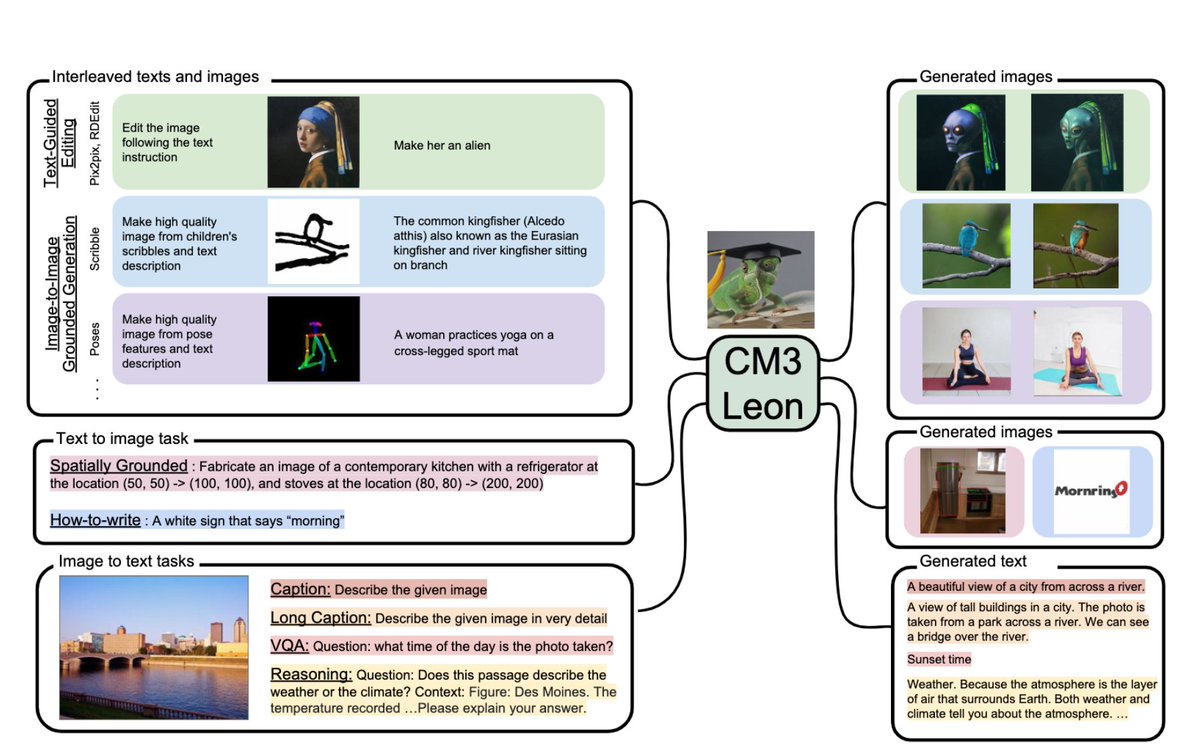

Excited to release our work from last year showcasing a stable training recipe for fully token-based multi-modal early-fusion auto-regressive models! arxiv.org/abs/2405.09818 Huge shout-out to Armen Aghajanyan, Ramakanth, Luke Zettlemoyer, Gargi Ghosh, and other co-authors. (1/n)

We found that the uncontrolled growth of output norms is an early indicator of future training divergence. More shout-outs: Victoria X Lin, Chunting Zhou, Lili Yu (4/n)

The team is working very hard to make this happen. Armen Aghajanyan, Srini Iyer, Gargi Ghosh, Luke Zettlemoyer

Extremely proud of the team who made it happen. Armen Aghajanyan, Srini Iyer, Luke Zettlemoyer, Asli Celikyilmaz, Victoria X Lin, Scott Yih, Lili Yu, and many others!

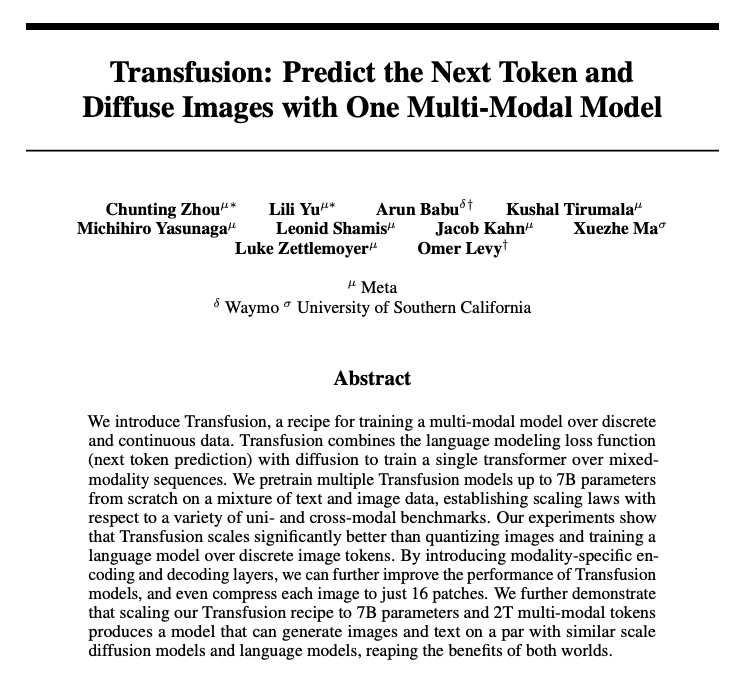

🚀 Excited to share our latest work: Transfusion! A new multi-modal generative training method combining language modeling and image diffusion in a single transformer! Huge shout-out to Chunting Zhou, Omer Levy, Michi Yasunaga, Arun Babu, Kushal Tirumala, and other collaborators.