Luke Muehlhauser

@lukeprog

Open Philanthropy Senior Program Officer, AI Governance and Policy

ID: 24847747

http://lukeprog.com 17-03-2009 06:00:52

3.3K Tweets

8.8K Followers

300 Following

Environmental lawsuits in California attempted to block nearly half of all proposed housing units, CEQA Works

Excited to share a new blog post on Planned Obsolescence (first one in a while!) by my colleague Luca Righetti 🔸! Luca says dangerous capability tests need to get *way* harder: planned-obsolescence.org/dangerous-capa…

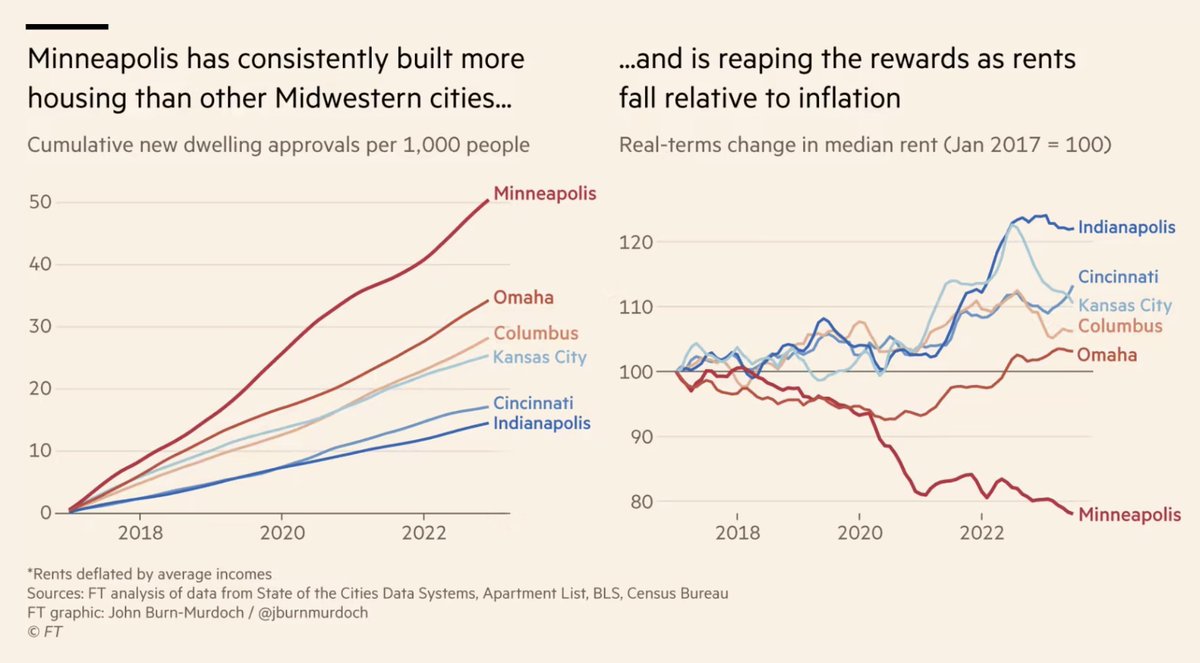

YIMBYs have been taking victory laps this week on the back of some great speeches at the DNC. Open Philanthropy has been the movement's biggest funder for most of the past decade, and I’m super proud of the progress. But we’re still very early in this fight: 1/ x.com/JerusalemDemsa…

We are hiring! Google DeepMind's Frontier Safety and Governance team is dedicated to mitigating frontier AI risks; we work closely with technical safety, policy, responsibility, security, and GDM leadership. Please encourage great people to apply! 1/ boards.greenhouse.io/deepmind/jobs/…

🛜 "If-then" commitments are the new frontier in AI safety. This emerging framework aims to mitigate AI risks without needlessly stifling tech advances. Increased interest in the framework will accelerate its progress & maturity, writes Holden Karnofsky. carnegieendowment.org/research/2024/…

The global nature of AI risks makes it necessary to recognize AI safety as an international public good, and work towards coordinated governance of these risks. Statement made in Venice: idais.ai/idais-venice/ Associated The New York Times article: nytimes.com/2024/09/16/bus…