Perry E. Metzger

@perrymetzger

Mad Scientist, Bon Vivant, and Raconteur.

ID: 127100323

28-03-2010 02:04:03

47,47K Tweets

12,12K Followers

946 Following

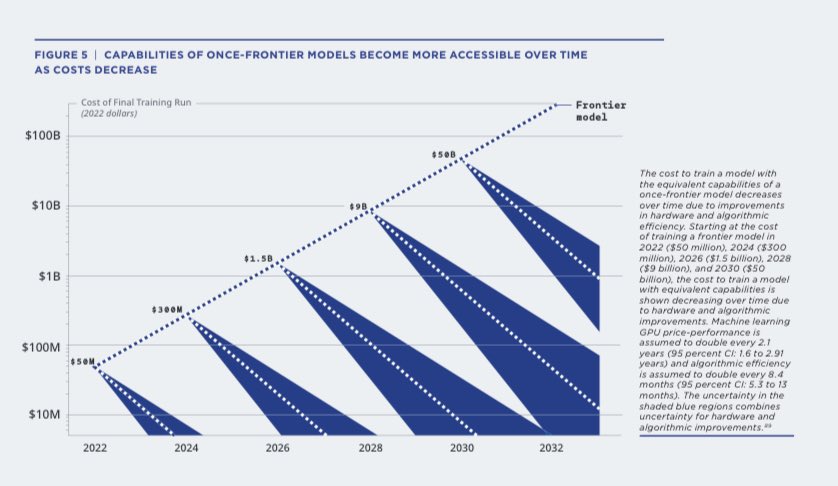

California is considering a new #AI bill (SB 1047) that is "one of the most far-reaching and potentially destructive technology measures being considered today," as I argue in this new R Street Institute analysis. rstreet.org/commentary/cal…

By the way, Daniel Jeffries is a good follow on AI doomerism, progress-oriented thinking, and the like.