Philip Vollet

@philipvollet

Head of Developer Growth @weaviate_io & Open source lover tweeting about machine learning and data science projects.

ID: 421795636

https://www.linkedin.com/in/philipvollet 26-11-2011 11:40:03

17,17K Tweets

30,30K Followers

6,6K Following

incredibly excited to share that i will be speaking at the flagship ai event by dotConferences in paris next month. check it out! 👨🏾‍🌾 dotai.io

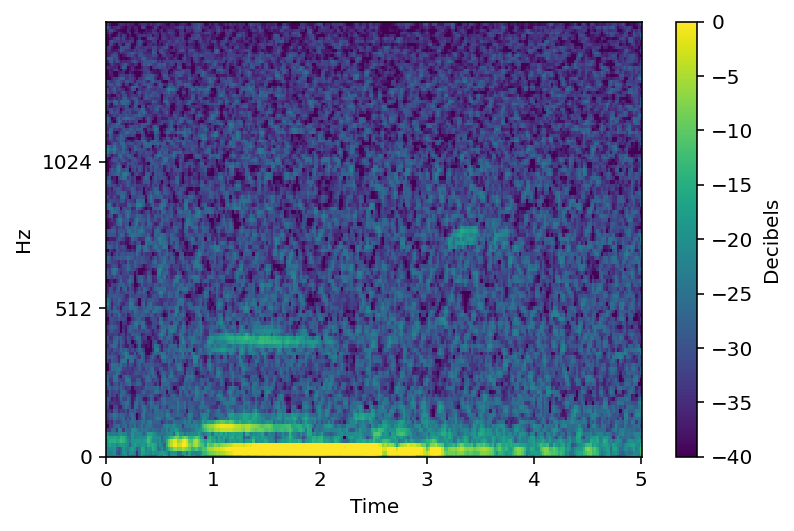

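5 chunking techniques you need to know for RAG. An article by Weaviate • vector database highlights the importance of chunking in RAG applications. Chunking is critical for improving LLM performance and makes RAG applications smarter, faster, and more efficient. The article introduces five main chunking techniques: 01 - Fixed-size chunking: - Method: split the text into chunks of a fixed size, regardless of natural breakpoints or structure in the content. -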
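
As a rough illustration of the fixed-size method described in the tweet, here is a minimal Python sketch. It is not taken from the Weaviate article: the function name `fixed_size_chunks`, the `chunk_size` parameter, and the choice to measure size in characters (rather than tokens or words) are all assumptions made for this example.

```python
def fixed_size_chunks(text: str, chunk_size: int = 200) -> list[str]:
    """Split text into consecutive chunks of at most chunk_size characters.

    Hypothetical helper for illustration only. Size is measured in
    characters (an assumption; the source does not name a unit), and
    cuts ignore sentence or paragraph boundaries, as the method describes.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be a positive integer")
    # Step through the text in strides of chunk_size; the last chunk
    # may be shorter than the others.
    return [text[i:i + chunk_size] for i in range(0, len(text), chunk_size)]


if __name__ == "__main__":
    sample = "Chunking splits long documents into smaller pieces for retrieval."
    for chunk in fixed_size_chunks(sample, chunk_size=20):
        print(repr(chunk))
```

Because the cut points ignore content structure, a chunk can end in the middle of a word or sentence, which is the trade-off the method description points to.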