Ruoshi Liu

@ruoshi_liu

Building better 👁️ and 🧠 for 🤖 | PhD Student @Columbia

ID: 1370948127944015880

http://ruoshiliu.github.io 14-03-2021 04:01:29

277 Tweets

1.1K Followers

620 Following

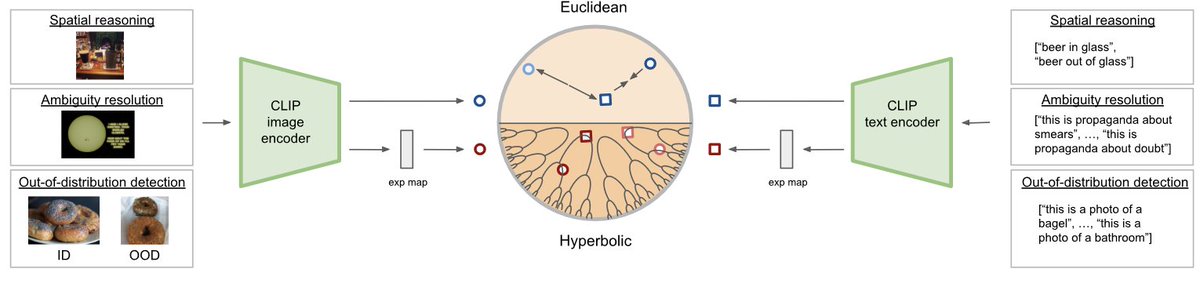

Vision-language models benefit from hyperbolic embeddings for standard tasks, but did you know that hyperbolic vision-language models also have surprising properties? Our new #TMLR paper shows 3 intriguing properties. w/ Sarah Ibrahimi, Mina Ghadimi, Nanne van Noord, and Marcel Worring