Thomas Wolf

@thom_wolf

Co-founder and CSO @HuggingFace - open-source and open-science

ID: 246939962

https://thomwolf.io 03-02-2011 19:33:48

3.3K Tweets

73.73K Followers

4.4K Following

Packing for a weekend, I found this. It is hard to believe that BigScience Large Model Training really happened. The first time I heard of the idea, my take was "this is going to be fun... but not going to work." Kudos to Thomas Wolf for the vision.

Bram Hugging Face Zach Mueller github.com/huggingface/tr… We have been running the kernels extensively already, so they are stable. The integration PR is already merged, thanks to Zach Mueller's team! Use `--use-liger-kernel` in the training arguments in the next release. The official integration news will

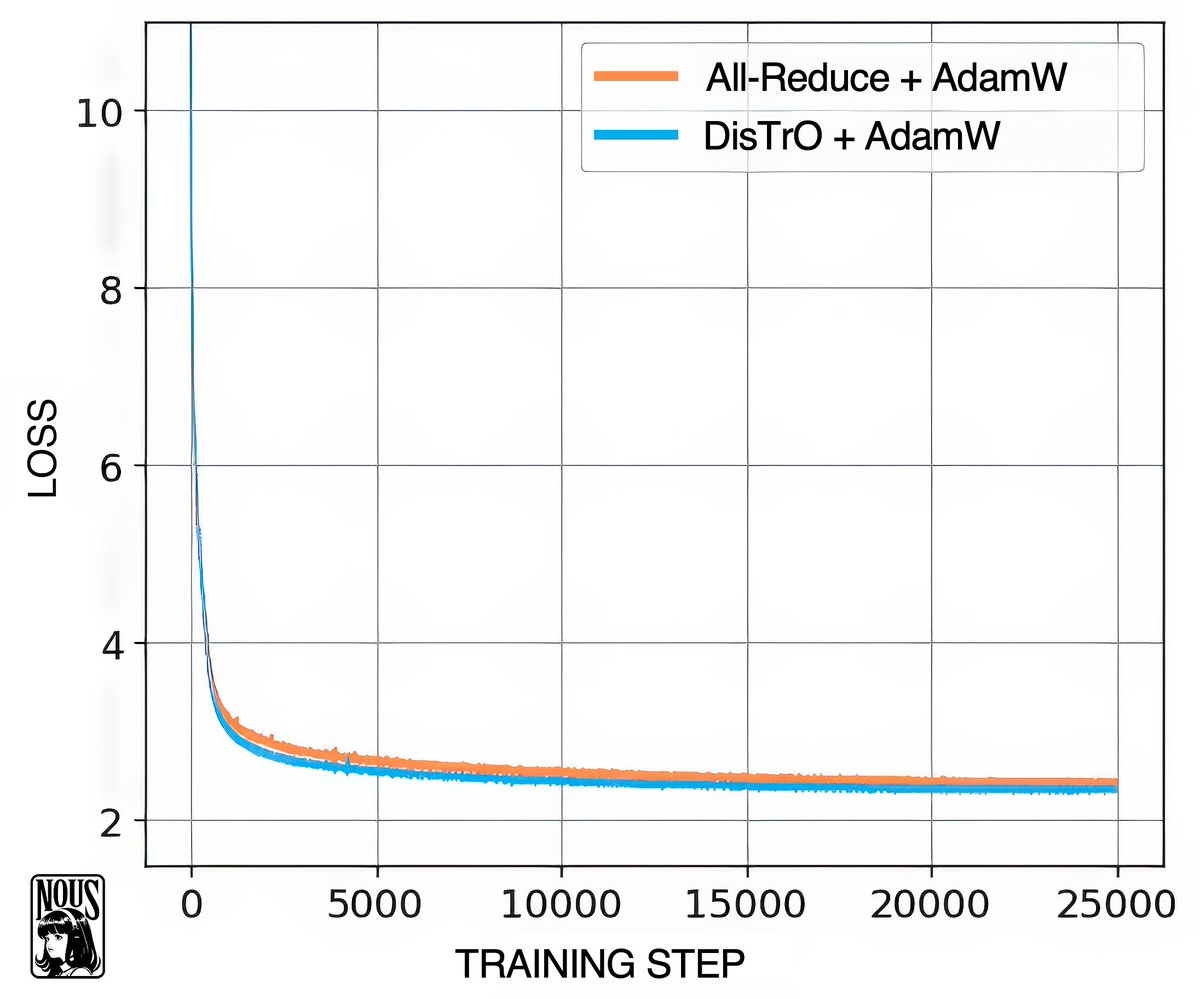

Really cool research by Nous Research, reducing the amount of GPU communication by 1,000x to 10,000x while matching the convergence rate. Very glad they used the Hugging Face nanotron library for their experiment 🔥 Congrats Bowen Peng emozilla ari 🏳️⚧️ ฅ^•ﻌ•^ฅ Umer Adil 🤗

I'm pretty sure it's the first time I've seen a robotics repo topping GitHub's AI trending section. Huge congratulations Remi Cadene Simon Alibert Alexander Soare Marina B Michel Aractingi Haixuan Xavier Tao Jess Moss

While working on the 1.58 LLM project at Hugging Face, I played around with some kernels for Int2xInt8. I wrote my first kernel in Triton where, instead of unpacking the weights before performing the matrix multiplication, I fused the two operations and unpacked the weights on the
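To make the idea in the tweet above concrete, here is a minimal pure-Python sketch of the difference between unpacking 2-bit weights first and unpacking them inside the multiply-accumulate loop. It is not the author's Triton kernel: the packing layout (four 2-bit codes per byte, lowest bits first), the ternary value mapping (-1, 0, 1 stored as 0, 1, 2), and all function names are assumptions chosen for illustration only.

```python
def pack_int2(weights):
    """Pack ternary weights (-1, 0, 1) into bytes, four 2-bit codes per byte.

    Assumed layout: weight j of each group of four sits in bits 2j..2j+1.
    """
    if len(weights) % 4 != 0:
        raise ValueError("number of weights must be a multiple of 4")
    packed = []
    for i in range(0, len(weights), 4):
        byte = 0
        for j, w in enumerate(weights[i:i + 4]):
            byte |= (w + 1) << (2 * j)  # map -1/0/1 to codes 0/1/2
        packed.append(byte)
    return packed


def unpack_int2(packed):
    """Expand packed bytes back into a full list of ternary weights."""
    out = []
    for byte in packed:
        for j in range(4):
            out.append(((byte >> (2 * j)) & 0b11) - 1)
    return out


def dot_unpack_first(packed_row, activations):
    """Two-step version: materialize all weights, then multiply."""
    weights = unpack_int2(packed_row)
    return sum(w * a for w, a in zip(weights, activations))


def dot_fused(packed_row, activations):
    """Fused version: decode each weight inside the accumulation loop,
    so the unpacked weight list is never materialized."""
    acc = 0
    for k, byte in enumerate(packed_row):
        for j in range(4):
            w = ((byte >> (2 * j)) & 0b11) - 1
            acc += w * activations[4 * k + j]
    return acc


weights = [1, -1, 0, 1, 0, 0, -1, 1]
activations = [3, -2, 7, 5, 1, 4, -6, 2]  # stand-ins for int8 activations
packed = pack_int2(weights)
result = dot_fused(packed, activations)
```

Both functions return the same dot product. The fused form simply avoids the intermediate unpacked buffer, which is the general motivation for fusing the two steps in a GPU kernel.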

excited to join Hugging Face as an ml eng intern today! im sorry Thomas Wolf for bothering you for 8 months nonstop 😵

Our CSO Thomas Wolf on Small LLMs being a game changer and current exciting things happening at Hugging Face 🤗 techstrong.tv/videos/ecotech…