Unsloth AI

@unslothai

Open source fine-tuning of LLMs! 🦥

Github: github.com/unslothai/unsl… Discord: discord.gg/unsloth

ID: 1730159888402395136

http://unsloth.ai 30-11-2023 09:40:46

157 Tweets

5.5K Followers

379 Following

🌟 Introducing PraisonAI Train 🌟 Train Llama 3.1 in just 1 line 💡 Uses Unsloth AI to Train 2x Faster & Less GPU 📈 Save in GGUF format 🎉 Save Models locally 🔗 Upload to @Ollama.com 🌐 Upload to Hugging Face All above steps done in one line command Sub:

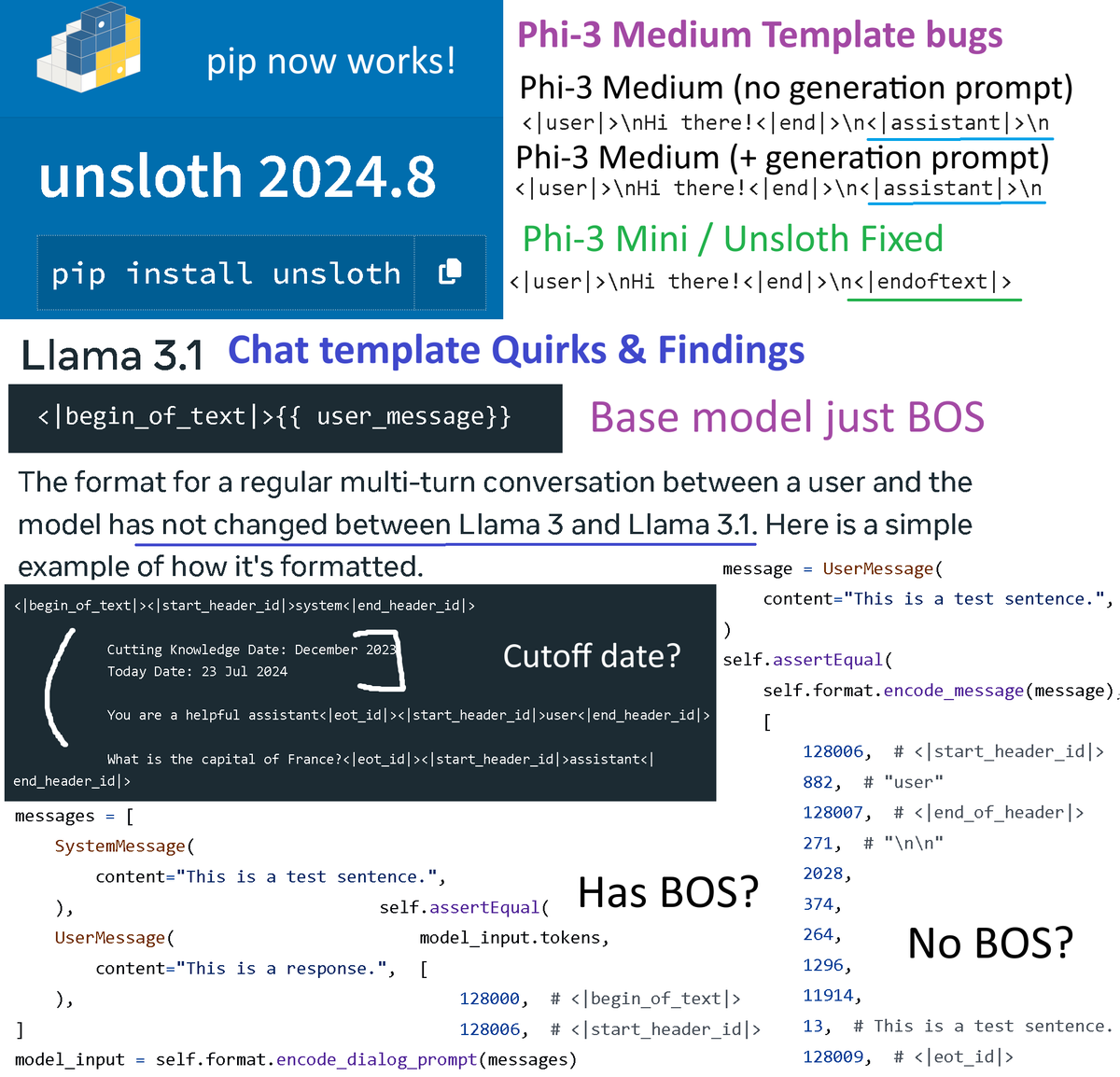

Just added Phi 3.5 fine-tuning in Unsloth AI! It's 2x faster, uses 50% less VRAM, and is llama-fied! 1. Llama-fication: By unfusing QKV & MLP, LoRA finetuning has a lower loss, since fused modules train only 1 A matrix for QKV, whilst unfusing trains 3. 2. Long RoPE: Phi 3.5 is
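To make the "1 A matrix vs 3" point concrete, here is a minimal sketch that only counts LoRA adapter matrices and parameters for a fused versus an unfused QKV projection. It is not Unsloth's code. The hidden size (3072), the rank (16), the equal Q/K/V widths, and the helper name `lora_params` are illustrative assumptions, not values taken from the tweet.

```python
def lora_params(d_in: int, d_out: int, rank: int) -> int:
    """Trainable parameters of one LoRA adapter.

    A has shape (rank, d_in) and B has shape (d_out, rank).
    """
    return rank * d_in + d_out * rank


hidden = 3072  # assumed hidden size, for illustration only
rank = 16      # assumed LoRA rank, for illustration only

# Fused: a single projection maps hidden -> 3 * hidden (Q, K, V concatenated),
# so one adapter (one A matrix) is shared by Q, K and V.
fused = {"a_matrices": 1, "params": lora_params(hidden, 3 * hidden, rank)}

# Unfused: three separate hidden -> hidden projections,
# so each of Q, K and V gets its own adapter (three A matrices).
unfused = {"a_matrices": 3, "params": 3 * lora_params(hidden, hidden, rank)}

print("fused  :", fused)
print("unfused:", unfused)
```

Under these assumptions the unfused layout gives Q, K and V each their own down-projection A instead of sharing one, which is the extra capacity the tweet credits for the lower loss.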

Phi 3.5 Finetuning 2x faster + Llamafied for more accuracy using Unsloth AI lib (Reddit link in 1st comment)

I'll be at the PyTorch Conference on Sept 18 to talk about Triton kernels, CUDA, finetuning, hand deriving derivatives, backprop, Unsloth AI & more! Thanks to Kartikay Khandelwal for inviting me! If anyone has burning questions, lmk! Or come and say hi! Event: events.linuxfoundation.org/pytorch-confer…

🚄 Want to do continued pretraining or supervised fine-tuning even faster? We hear Unsloth AI has the right tool for the job. Join us live to investigate the concepts and code while we chat with the creators! RSVP: bit.ly/finetuningunsl…

Thank you Awni Hannun for MLX and Matt Williams for the great video! I just got this into ollama the other day. So far I've tested with both MLX and Unsloth AI and it seems to work well, but I'd love for more people to try it out!

We will be joining AI Makerspace to do a live tutorial on continued pretraining & supervised fine-tuning with Unsloth! Hope to see you all there on Sept 4! 🦥 Event: lu.ma/xd0zzk0h

Just published a few high-level notes on fine-tuning AI models. Including using Unsloth AI and other methods. becomingahacker.org/fine-tuning-ai…

Uploaded more 4bit bnb quants to huggingface.co/unsloth for 4x faster downloading! 1. Nous Research Hermes 8, 70 & 405b 2. Cohere Command R 32b, R+ 104b 3. Ashvini Jindal Llama 3.1 Storm 4. Reuploaded Llama 3.1 405b - 50% less VRAM use for inference since KV cache was duplicated

🚀 Fine-tune Llama-3.1-Storm-8B 2.1x faster and with 60% less VRAM, with no accuracy degradation! Finetuning Colab (change model path to unsloth/Llama-3.1-Storm-8B-bnb-4bit): colab.research.google.com/drive/1Ys44kVv… Shoutout to Daniel Han and

Our talk about Continued Pretraining & Fine-tuning with AI Makerspace is out! Details: - How Unsloth was created & how it works - How to get started with Triton & CUDA - Continued Pretraining use cases + tutorial - Fine-tuning vs RAG Watch: youtube.com/watch?v=PRlzBl…

Michael and Daniel Han have been killing it since joining YC. Unsloth AI is hands down the most impressive open-source finetuning solution I’ve come across. Their potential is limitless 🚀

What we built🏗️, shipped🚢, and shared🚀 last week: Continued Pretraining and Fine-Tuning with Unsloth AI. Learn about the secret sauce directly from the creator, Daniel Han! ✍️ Manual gradient derivations 🧑‍💻 Custom kernels ⚡ Flash Attention 2 Recording: