Trustworthy ML Initiative (TrustML)

@trustworthy_ml

Latest research in Trustworthy ML. Organizers: @JaydeepBorkar @sbmisi @hima_lakkaraju @sarahookr Sarah Tan @chhaviyadav_ @_cagarwal @m_lemanczyk @HaohanWang

ID: 1262375165490540549

https://www.trustworthyml.org 18-05-2020 13:31:24

1.1K Tweets

6.6K Followers

66 Following

Here's my last PhD paper: “The Files are in the Computer: Copyright, Memorization, and Generative-AI Systems.” James Grimmelmann and I address the ambiguity over the relationship between copying and memorization: when a (near-)exact copy of training data can be reconstructed from a model.

Our work on the challenges and inconclusiveness of membership inference attacks (MIAs) on LLMs has been accepted to the Conference on Language Modeling! arxiv.org/abs/2402.07841 This work has prompted new directions and many conversations on MIA evaluations. I'll list them in this thread; feel free to add to it!

When I talk about the personal data people share with OpenAI and its privacy implications, I get the 'come on! people don't share that with ChatGPT! 🫷' response. In our Conference on Language Modeling paper, we study disclosures and find many concerning ⚠️ cases of sensitive information sharing: tinyurl.com/ChatGPT-person…

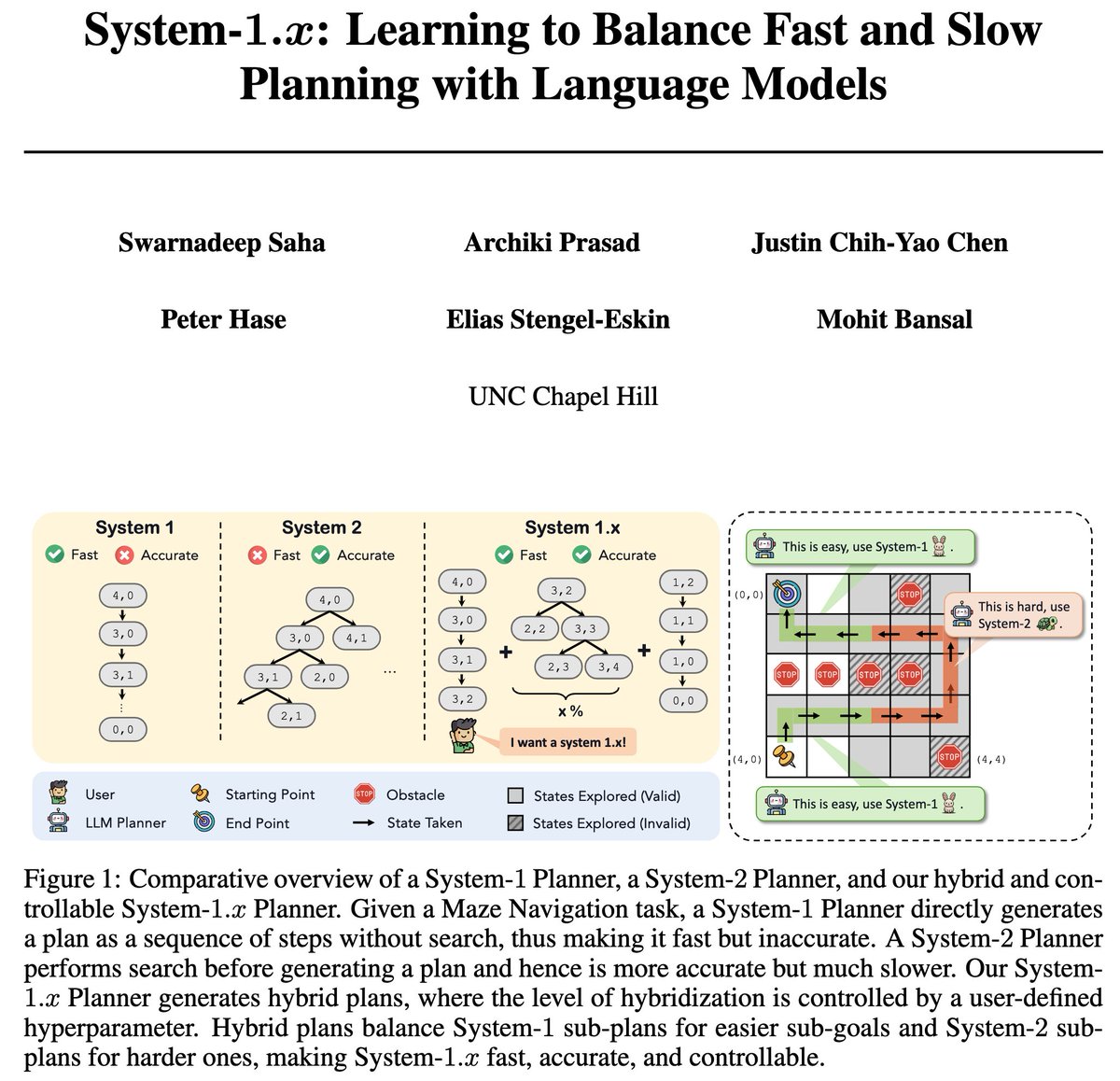

Justin Chih-Yao Chen, Swarnadeep Saha, Elias Stengel-Eskin, Archiki Prasad, ICML Conference, Tianlong Chen, Beidi Chen. Postdoc positions: x.com/mohitban47/sta…

🚨 New paper and fairness toolkit alert 🚨 Announcing OxonFair: A Flexible Toolkit for Algorithmic Fairness, with Zihao Fu, Sandra Wachter, Brent Mittelstadt and Chris Russell. Toolkit: github.com/oxfordinternet… Paper: papers.ssrn.com/sol3/papers.cf…

🚩 (1/2) Please help forward the Call for the 2024 Adversarial Machine Learning (AdvML) Rising Star Awards! We promote junior researchers in AI safety, robustness, and security. Award events are hosted at the AdvML'Frontiers workshop at NeurIPS 2024. Info: sites.google.com/view/advml/adv…

(2/2) #NeurIPS2024 AdvML'Frontiers Workshop: advml-frontier.github.io Past awardees: '23: Tianlong Chen, Vikash Sehwag; '22: Niloofar Mireshghallah, Linyi Li; '21: Florian Tramèr, Huan Zhang. cc Trustworthy ML Initiative (TrustML), LLM Security. Award committee: sijia.liu, Cho-Jui Hsieh, Bo Li, and yours truly.

The 3rd AdvML-Frontiers Workshop (advml-frontier.github.io) is set for #NeurIPS 2024! This year, we're exploring the expanding landscape of trustworthy AI, especially in large multimodal systems. cc Trustworthy ML Initiative (TrustML), LLM Security 🚀 We're now…

Excited to announce the 2nd edition of our Regulatable ML workshop at NeurIPS! We plan to debate burning questions around the regulation of generative #AI and Artificial General Intelligence (#AGI). We are accepting submissions until Aug. 30: regulatableml.github.io [1/N]

![Regulatable ML workshop announcement](https://pbs.twimg.com/media/GTmBepgWgAA4ofe.jpg)

📰 Excited to be organizing a workshop on interpretability at NeurIPS'24, called 'Interpretable AI: Past, Present and Future'! Submit to our workshop for all things inherently interpretable! Submission deadline: 30 Aug 🔗 interpretable-ai-workshop.github.io Follow this account for updates!

🚨 We are extending the Call for Papers for the 3rd IEEE Conference on Secure and Trustworthy Machine Learning (SaTML)! 👉 satml.org/participate-cf… ⏰ New deadline: Sep 27. This extension gives you more time to submit your best work on secure AI algorithms and systems 😉