Collin Burns

@CollinBurns4

Superalignment @OpenAI. Formerly @berkeley_ai @Columbia. Former Rubik's Cube world record holder.

ID:1236495233996775424

http://collinpburns.com/

08-03-2020 03:33:43

71 Tweets

11.1K Followers

276 Following

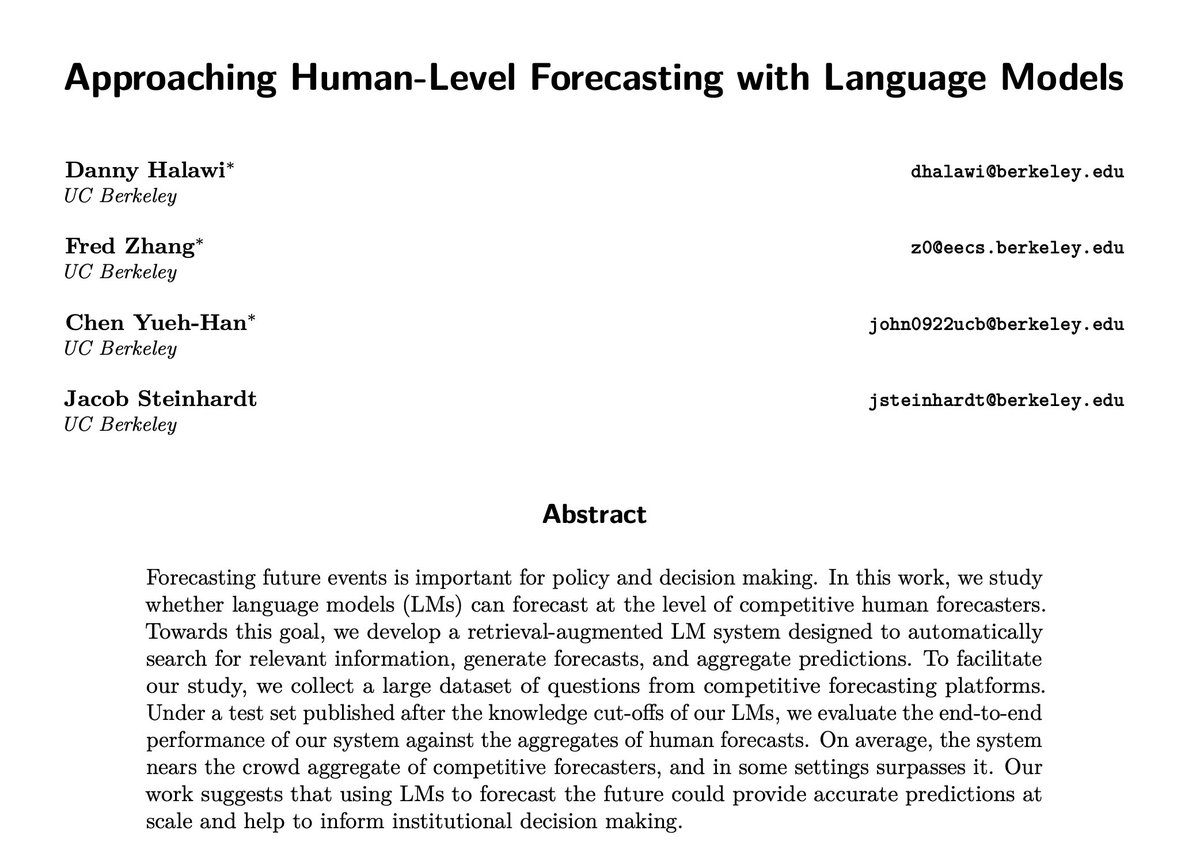

Can we build an LLM system to forecast geo-political events at the level of human forecasters?

Introducing our work Approaching Human-Level Forecasting with Language Models!

arXiv: arxiv.org/abs/2402.18563

Joint work with Danny Halawi, Fred Zhang, and John (Yueh-Han) Chen.

Aanish Nair 🟢🟡 oh that ship has sailed, sorry :D

Actually, one of my favorite meetings at OpenAI was a cubing session with two very fast cubers, one of them a former world record holder. I can't cube anywhere near my prior level anymore, so it was a bit embarrassing to cube alongside them, but it was really fun.

So happy about this release and grateful to my awesome Preparedness team (especially Tejal Patwardhan), Policy Research, Superalignment, and all of OpenAI for the hard work it took to get us here. It is still only a start, but the work will continue!

I'm extremely impressed by Aleksander Madry, Tejal Patwardhan, and Kevin Liu for developing this Preparedness Framework.

It's a huge step by OpenAI toward stronger AGI safety.

Excited to launch our paper on making alignment tractable with Collin Burns, Pavel Izmailov, Jan Hendrik Kirchner, Bowen Baker, Leo Gao, Leopold Aschenbrenner, Adrien Ecoffet, Manas Joglekar, Jan Leike, Ilya Sutskever, and Jeff Wu!