Berkeley AI Research

@berkeley_ai

We're graduate students, postdocs, faculty and scientists at the cutting edge of artificial intelligence research.

ID:891077171673931776

http://bair.berkeley.edu/ 28-07-2017 23:25:27

772 Tweets

152.2K Followers

190 Following


Registration is now open for AI≡Science: Strengthening the Bond Between the Sciences and Artificial Intelligence, an exciting workshop organized by Aditi Krishnapriyan and Jennifer Listgarten at the Simons Institute for the Theory of Computing in Berkeley, June 10th-14th. simons.berkeley.edu/workshops/aisc…

🚀 Introducing RouterBench, the first comprehensive benchmark for evaluating LLM routers! 🎉

In a collaboration between Martian and Prof. Kurt Keutzer at UC Berkeley, we've created the first holistic framework for assessing LLM routing systems. 🧵1/8

To read more:…

'Why don't we have better robots yet?' is now posted on the TED Talks home page under Newest Talks (3rd row from the top), with links to PiE Robotics and a Forbes article on art by Ben Wolff. (Tagging Benjamin Wolff, @Berkeley_AI, UC Berkeley, Berkeley Engineering, TED.com)

New BAIR blog post on modeling extremely large images, by Ritwik Gupta 🇺🇦, Jitendra Malik, Trevor Darrell, and more!: bair.berkeley.edu/blog/2024/03/2…

Looking to hire top AI talent?

We've compiled a list of the brilliant Berkeley AI Research Ph.D. graduates of 2024 who are currently on the academic and industry job markets. (Thanks to our friends at Stanford AI Lab for the idea!)

Check it out here:

bair.berkeley.edu/blog/2024/03/1…

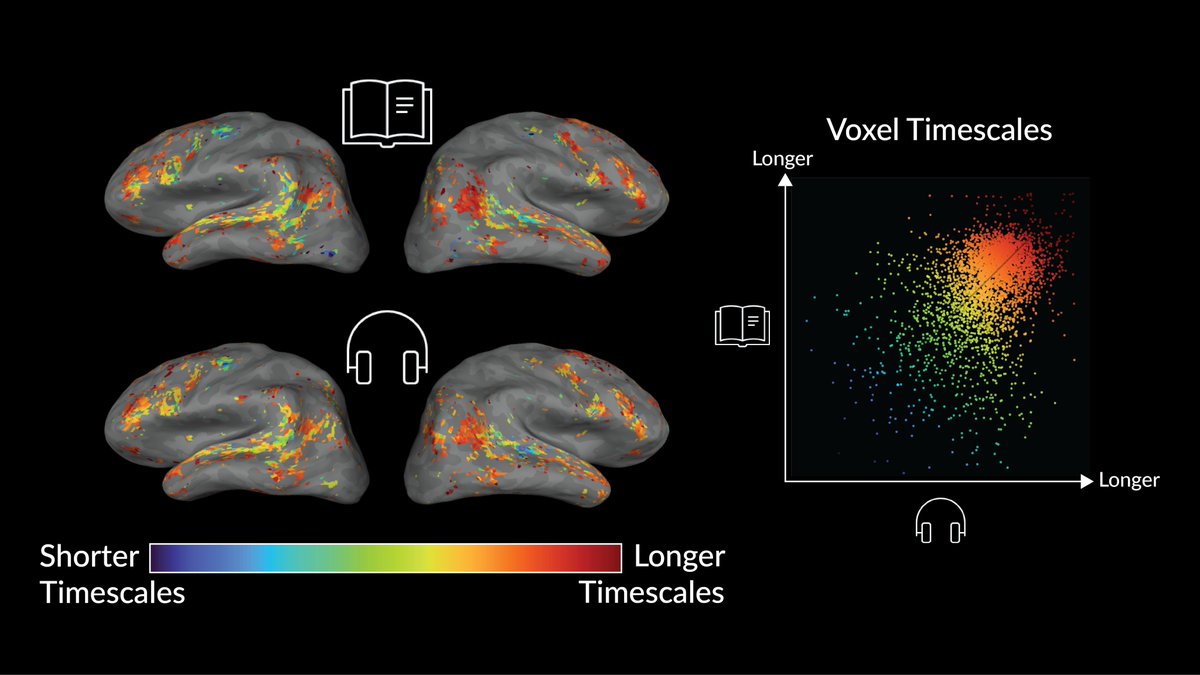

Do brain representations of language depend on whether the inputs are pixels or sounds?

Our Communications Biology paper studies this question from the perspective of language timescales. We find that representations are highly similar between modalities! rdcu.be/dACh5

1/8

From your cell phone to your TV, images and videos are now captured in 4K resolution or better. Vision methods, however, opt to downsize or crop them, losing information. We introduce xT, our framework to model large images end-to-end on contemporary GPUs! ai-climate.berkeley.edu/xt-website/

Achieving bimanual dexterity with RL + Sim2Real!

toruowo.github.io/bimanual-twist/

TL;DR: We train two robot hands to twist bottle lids using deep RL followed by sim-to-real transfer. A single policy trained with simple simulated bottles can generalize to drastically different real-world objects.

Congratulations to Berkeley AI Research member Soufiane Hayou on receiving the Gradient AI Research Fellowship!

gradient.ai/blog/soufiane-…

![Jiayi Pan (@pan_jiayipan) on Twitter, photo, 2024-04-10 16:07:22](https://pbs.twimg.com/media/GK0M2S8bEAAduvw.jpg)

Jiayi Pan (@pan_jiayipan), 2024-04-10 16:07:22: New paper from @Berkeley_AI on Autonomous Evaluation and Refinement of Digital Agents! We show that VLM/LLM-based evaluators can significantly improve the performance of agents for web browsing and device control, advancing SOTAs by 29% to 75%. arxiv.org/abs/2404.06474 [🧵]