Davis Blalock

@davisblalock

Research scientist @MosaicML. @MIT PhD. I retweet high-quality threads about machine learning papers. Weekly paper summaries newsletter: https://t.co/xX7NIpsIVZ

ID:805547773944889344

04-12-2016 23:02:10

1.2K Tweets

11.7K Followers

158 Following

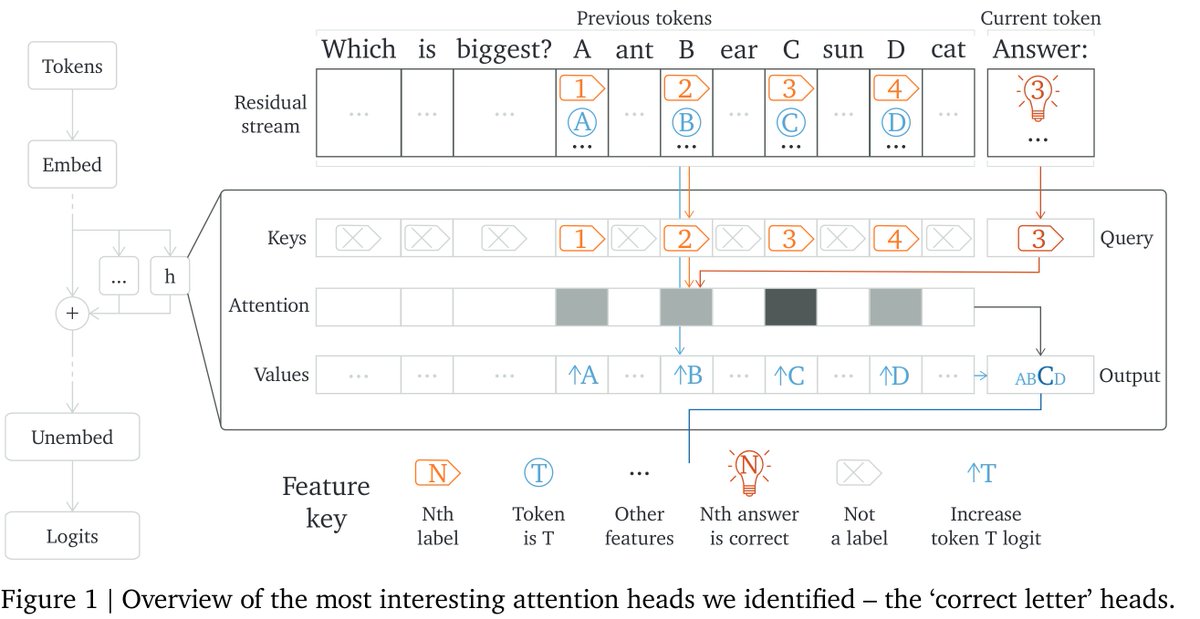

Mech interp has been very successful in tiny models, but does it scale? …Kinda!

Our new Google DeepMind paper studies how Chinchilla70B can do multiple-choice Qs, focusing on picking the correct letter. Small model techniques mostly work but it's messy!🧵arxiv.org/abs/2307.09458

📢 Today, we're thrilled to announce that @Databricks has completed its acquisition of MosaicML. Our teams share a common goal to make #GenerativeAI accessible for all. We're excited to change the world together!

Read the press release and stay tuned for more updates:…

Demystifying GPT-4: The engineering tradeoffs that led OpenAI to their architecture.

GPT-4 model architecture, training infrastructure, inference infrastructure, parameter count, training dataset composition, token count, layer count, parallelism, and vision.

semianalysis.com/p/gpt-4-archit…

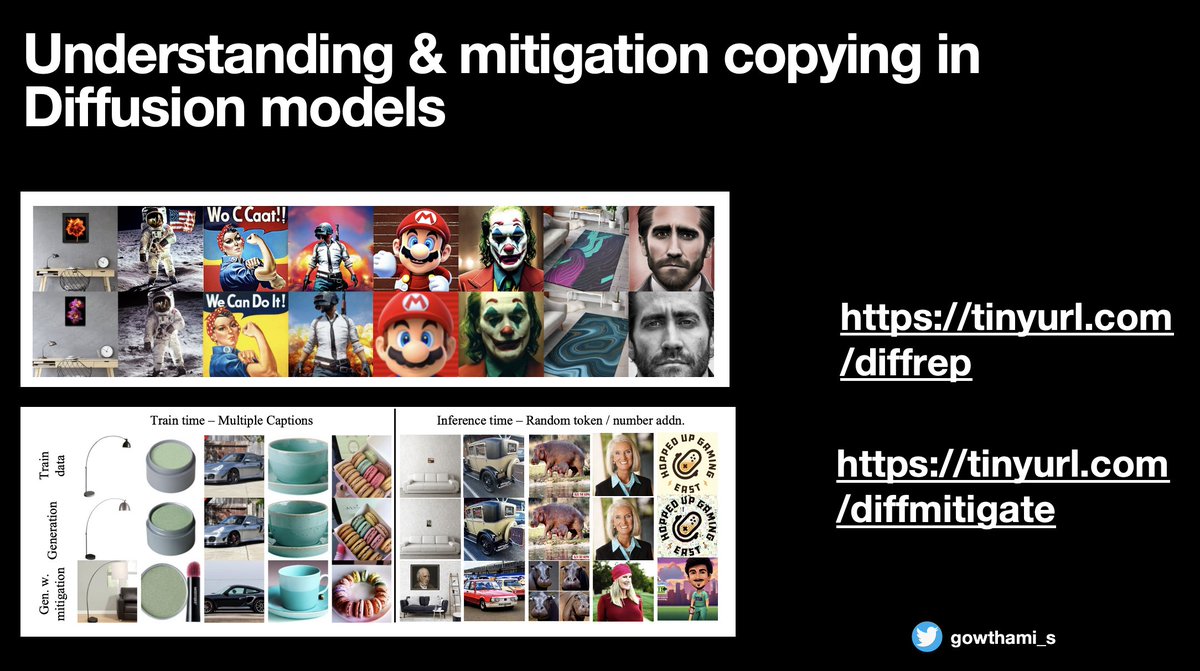

📃🚨 Does your diffusion model copy from the training data? How to find such behavior? Why does it happen? Can we somehow mitigate it?

A summary of recent work on understanding training-data replication in text-to-image (T2I) #diffusion models. A long 🧶

#machinelearning #aigeneration