Daphne Cornelisse

@daphne_cor

PhD student at @nyuniversity

ID: 903960922703659008

http://www.daphne-cornelisse.com

02-09-2017 12:40:53

395 Tweets

849 Followers

457 Following

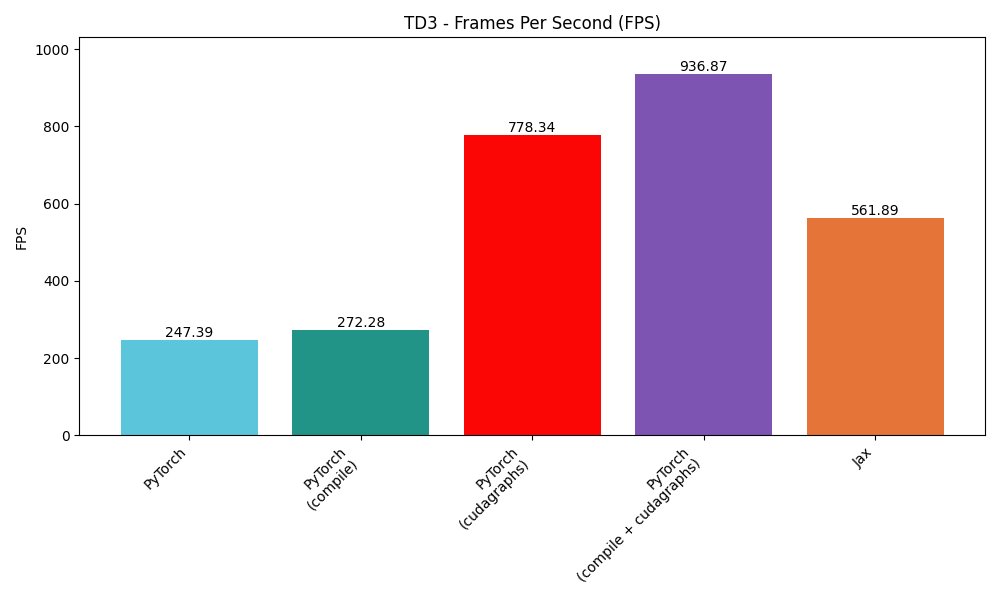

Live now on X/Twitter/YT adding Day 1 support for Eugene Vinitsky 🍒 and Daphne Cornelisse's GPU drive environment to PufferLib! Can we make it even faster?

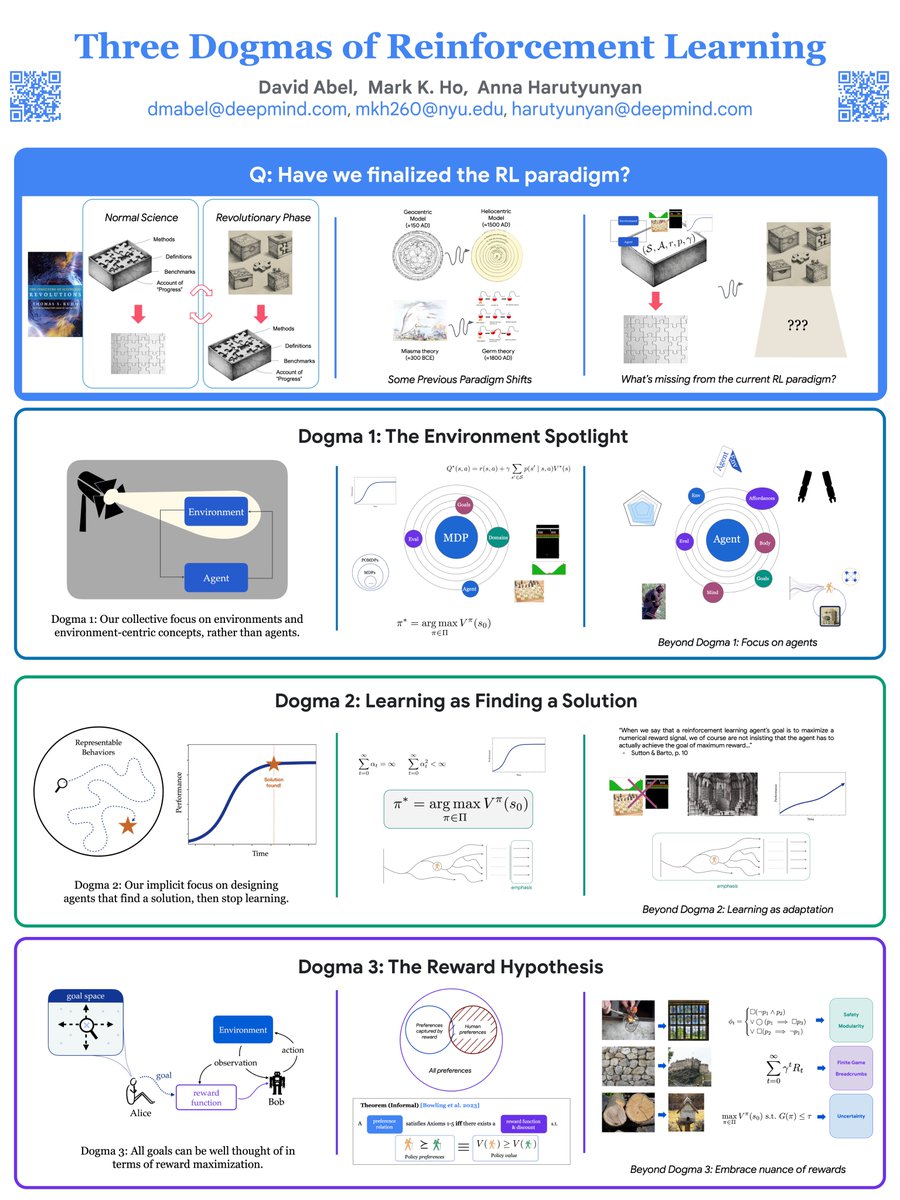

Excited to present Three Dogmas of RL on the last day of RL_Conference! > Talk in Room 168, 11.30am-12.30pm > Poster in Room 162, 12.30pm-2.30pm Anna Harutyunyan and I will be around, looking forward to your questions + challenges! 😄