Francisco Massa

@fvsmassa

Research Engineer at Facebook AI Research working on PyTorch.

ID:832596127962775552

17-02-2017 14:22:40

314 Tweets

3.4K Followers

11 Following

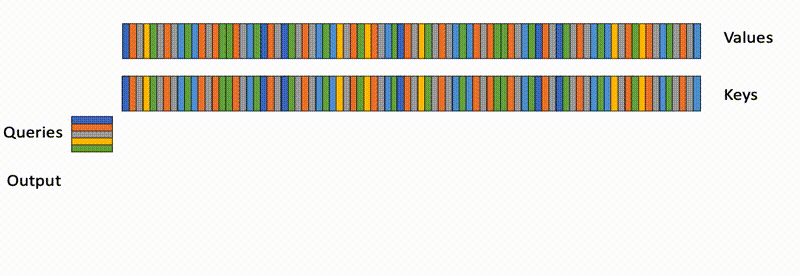

Announcing Flash-Decoding, to make long-context LLM inference up to 8x faster! Great collab with Daniel Haziza, Francisco Massa and Grigory Sizov.

Main idea: load the KV cache in parallel as fast as possible, then separately rescale to combine the results.

1/7
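The "rescale to combine" step in the tweet above can be illustrated with a small pure-Python sketch. This is not the Flash-Decoding kernel itself, which runs on GPU and processes the chunks in parallel. It only shows the arithmetic under stated assumptions: one query vector, a KV cache split into fixed-size chunks, and hypothetical function names (`attend_chunk`, `combine`, `split_kv_attention`) chosen for illustration. Each chunk returns an unnormalized output plus its local max score and sum of exponentials, and the final step rescales the partial results to a shared max before normalizing.

```python
import math


def attend_chunk(q, keys, values):
    """Attention of one query over one KV chunk.

    Returns (unnormalized output, local max score, local sum of exps).
    """
    scale = 1.0 / math.sqrt(len(q))
    scores = [scale * sum(qi * ki for qi, ki in zip(q, k)) for k in keys]
    m = max(scores)
    weights = [math.exp(s - m) for s in scores]  # stable: shifted by local max
    denom = sum(weights)
    dim = len(values[0])
    out = [sum(w * v[d] for w, v in zip(weights, values)) for d in range(dim)]
    return out, m, denom


def combine(partials):
    """Rescale per-chunk partial results to a shared max, then normalize."""
    m_global = max(m for _, m, _ in partials)
    dim = len(partials[0][0])
    num = [0.0] * dim
    den = 0.0
    for out, m, denom in partials:
        factor = math.exp(m - m_global)  # correction for this chunk's local max
        den += factor * denom
        for d in range(dim):
            num[d] += factor * out[d]
    return [n / den for n in num]


def split_kv_attention(q, keys, values, chunk_size):
    """Process KV chunks independently (sequentially here), then combine."""
    partials = [
        attend_chunk(q, keys[i:i + chunk_size], values[i:i + chunk_size])
        for i in range(0, len(keys), chunk_size)
    ]
    return combine(partials)


def reference_attention(q, keys, values):
    """Plain softmax attention over the whole cache, for comparison."""
    out, _, denom = attend_chunk(q, keys, values)
    return [o / denom for o in out]
```

Because every chunk carries its own max and sum of exponentials, the chunks can be computed in any order (or concurrently) and the combined result matches plain softmax attention over the full cache up to floating-point error.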

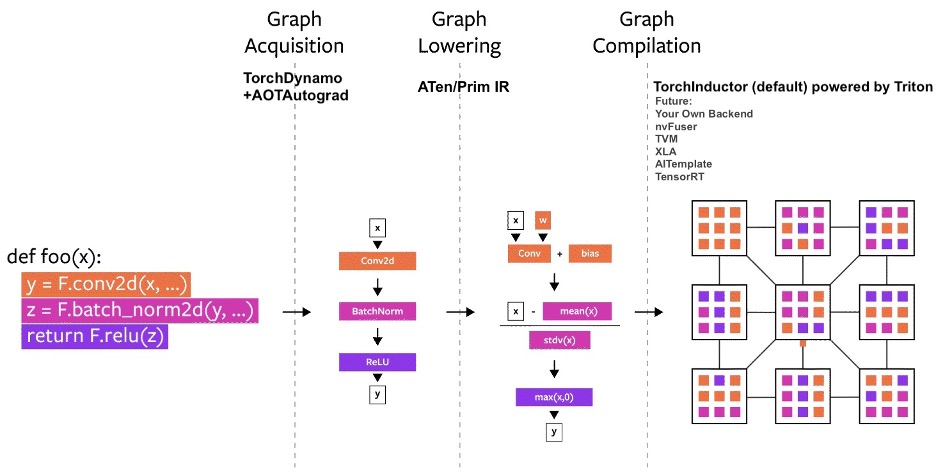

We just introduced PyTorch 2.0 at the #PyTorchConference, introducing torch.compile!

Available in the nightlies today, stable release in early March 2023.

Read the full post: bit.ly/3VNysOA

🧵below!

1/5

Glad to present our work with Francisco Massa and Alexandre Défossez « Hybrid Transformers for Music Source Separation » done at @MetaAI.

We achieve 9.20 dB of SDR on the MUSDB18 test set.

- paper: arxiv.org/abs/2211.08553

- code: github.com/facebookresear…

- audio: ai.honu.io/papers/htdemuc…

1/5

[🧠👨💻 ML Coding Series] Continuing on with the ML coding series! This might be the most thorough ML paper explanation in history - 1h and 45 minutes! 😅

Facebook (now @MetaAI) DETR explained

YT: youtu.be/xkuoZ50gK4Q

Nicolas Carion, Francisco Massa, Gabriel Synnaeve, Alexander Kirillov, Sergey Zagoruyko

1/

![Tweet photo from Aleksa Gordić 🍿🤖 (@gordic_aleksa), 2022-06-30 08:32:18](https://pbs.twimg.com/media/FWfLmJrX0AM6j7Y.jpg)

The latest release of TorchVision has a feature extraction utility. Super handy - check it out. Thanks Francisco Massa and team for helping put this together.

Looking forward to using this new functionality to allow more flexible feature extraction in timm, especially for vision transformers and MLP models. Thanks Alexander Soare and Francisco Massa.