icpp_pro

@icpp_pro

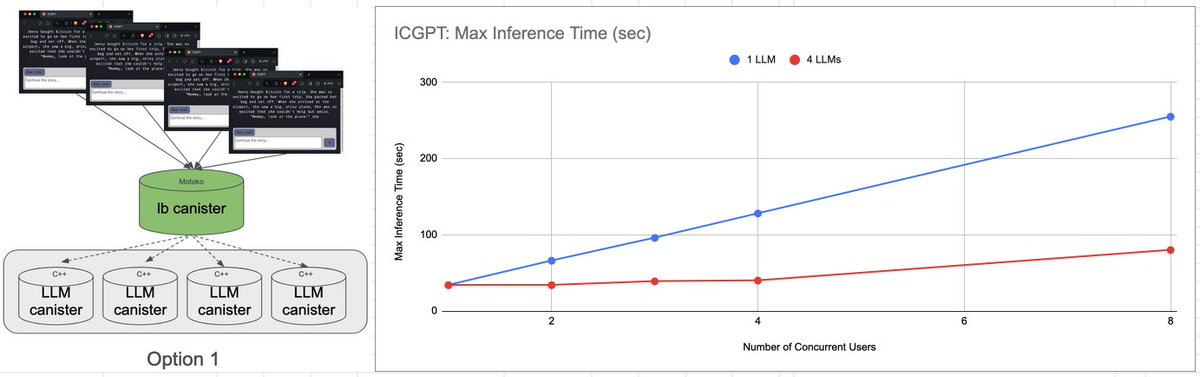

I write C/C++ for the Internet Computer.

ID: 1659672034320830464

https://icgpt.icpp.world/ 19-05-2023 21:27:27

235 Tweets

378 Followers

243 Following

Congrats also to DGDG (dgastonia).

This was my first time using it, to buy my medallion, and the process was super smooth. Well done!