Pang Wei Koh

@pangweikoh

Assistant professor at @uwcse. Formerly @StanfordAILab @GoogleAI @Coursera. 🇸🇬

ID: 1273467805283659777

https://koh.pw 18-06-2020 04:09:26

279 Tweets

3.3K Followers

880 Following

Raj Movva, Pang Wei Koh, and I write for Nature Medicine on using unlabeled data to improve generalization + fairness of medical AI models: nature.com/articles/s4159… We highlight two nice recent papers illustrating this: nature.com/articles/s4159…, nature.com/articles/s4159….

Instead of scaling pretraining data, can we scale the amount of data available at inference instead? Scaling RAG datastores to 1.4T tokens (on an academic budget) gives us better training-compute-optimal curves for LM & downstream performance. Check out Rulin Shao's work below!

After 7 months on the job market, I am happy to announce:
- I joined Ai2
- Professor at Carnegie Mellon University from Fall 2025
- New bitsandbytes maintainer Titus von Koeller

My main focus will be to strengthen open-source for real-world problems and bring the best AI to laptops 🧵

📣 After graduating from @UWCSE, I am joining UC Berkeley as an Assistant Professor (affiliated w Berkeley AI Research BerkeleyNLP) and Ai2 as a Research Scientist. I'm looking forward to tackling exciting challenges in NLP & generative AI together with new colleagues! 🐻✨

Pre #acl2024 talks, posters, and food 🥘🍝🍲🍛🥙 We (Sunipa Dev, Kai-Wei Chang, Tristan Naumann, Isabelle Augenstein, Scott Hale, Jose Camacho-Collados, Pang Wei Koh, Minjoon Seo, JinYeong Bak, and Yoon Kim, with Mohit Bansal and Violet Peng joining online) had a blast thanks to the amazing students

My lab will move to NYU Data Science and NYU Courant this Fall! I’m excited to connect with amazing researchers at CILVR and the larger ML/NLP community in NYC. I will be recruiting students this cycle at NYU. Happy to be back in the city 🗽 on the east coast as well. I had a

Check out JPEG-LM, a fun idea led by Xiaochuang Han -- we generate images simply by training an LM on raw JPEG bytes and show that it outperforms much more complicated VQ models, especially on rare inputs.

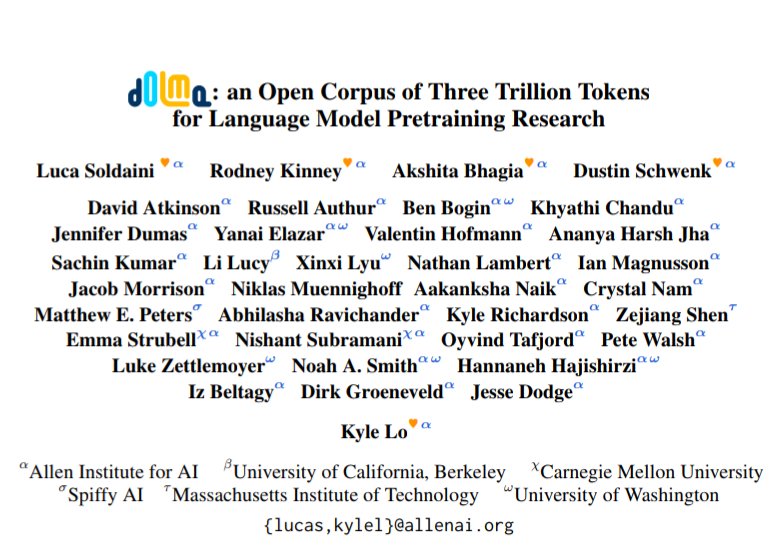

OLMoE, our fully open mixture-of-experts LLM led by Niklas Muennighoff, is out! Check out the paper for details on our design decisions: expert granularity, routing, upcycling, load balancing, etc.