Pengfei Liu

@stefan_fee

Associate Prof. at SJTU, leading GAIR Lab (https://t.co/Nfd8KmZx3B). Co-founder of Inspired Cognition. Postdoc at @LTIatCMU. Previously FNLP, @MILAMontreal.

ID:2818867628

http://pfliu.com/ 19-09-2014 02:34:24

388 Tweets

2.4K Followers

633 Following

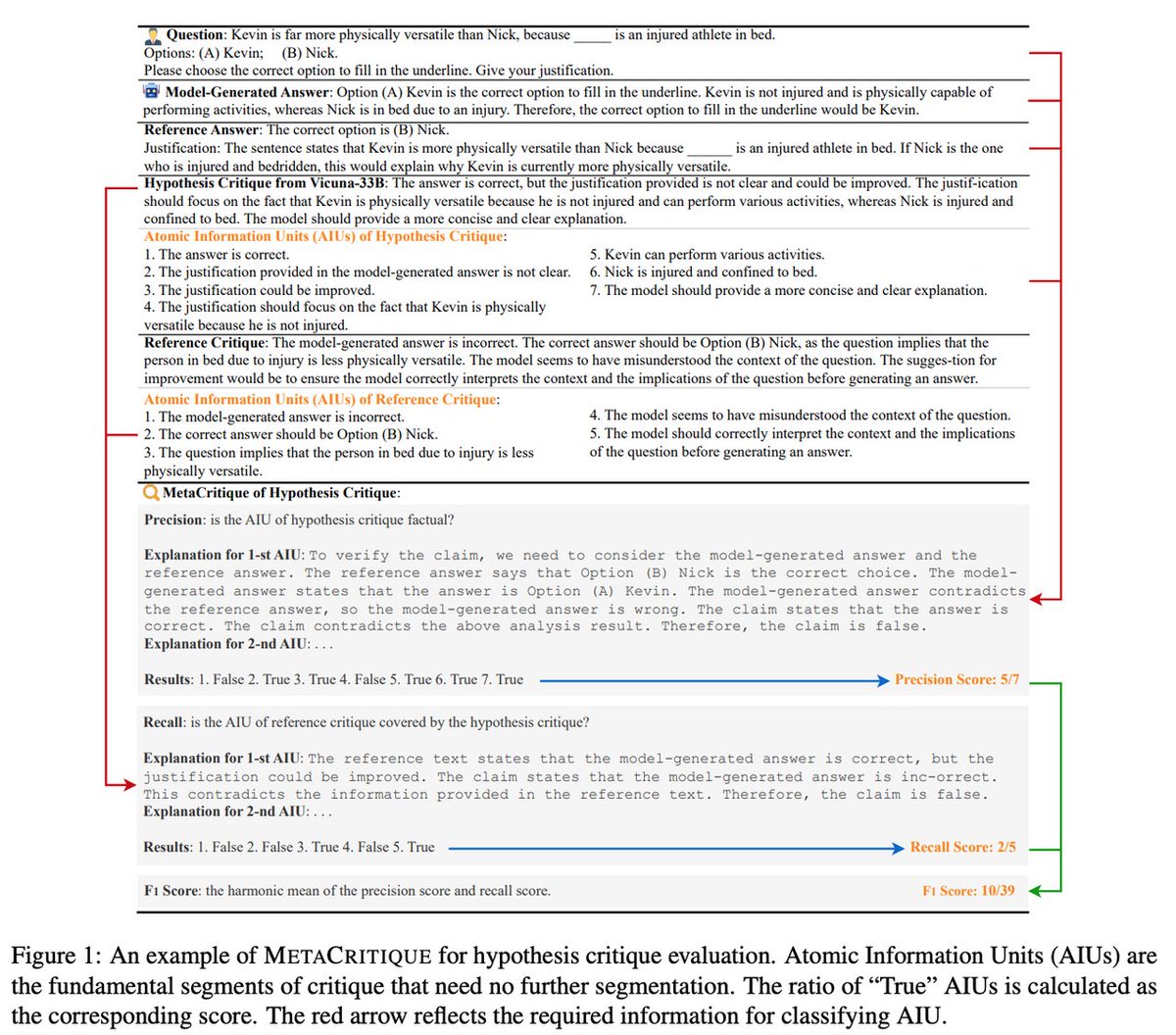

EXPERT RELEASE: SambaNova Systems is proud to feature Auto-J, a 13B parameter language model intended for use as an automated judge of other language models.

The model starts from Llama 2 13B Chat from AI at Meta, and is trained on a curated mix of open source LLM chat responses.…