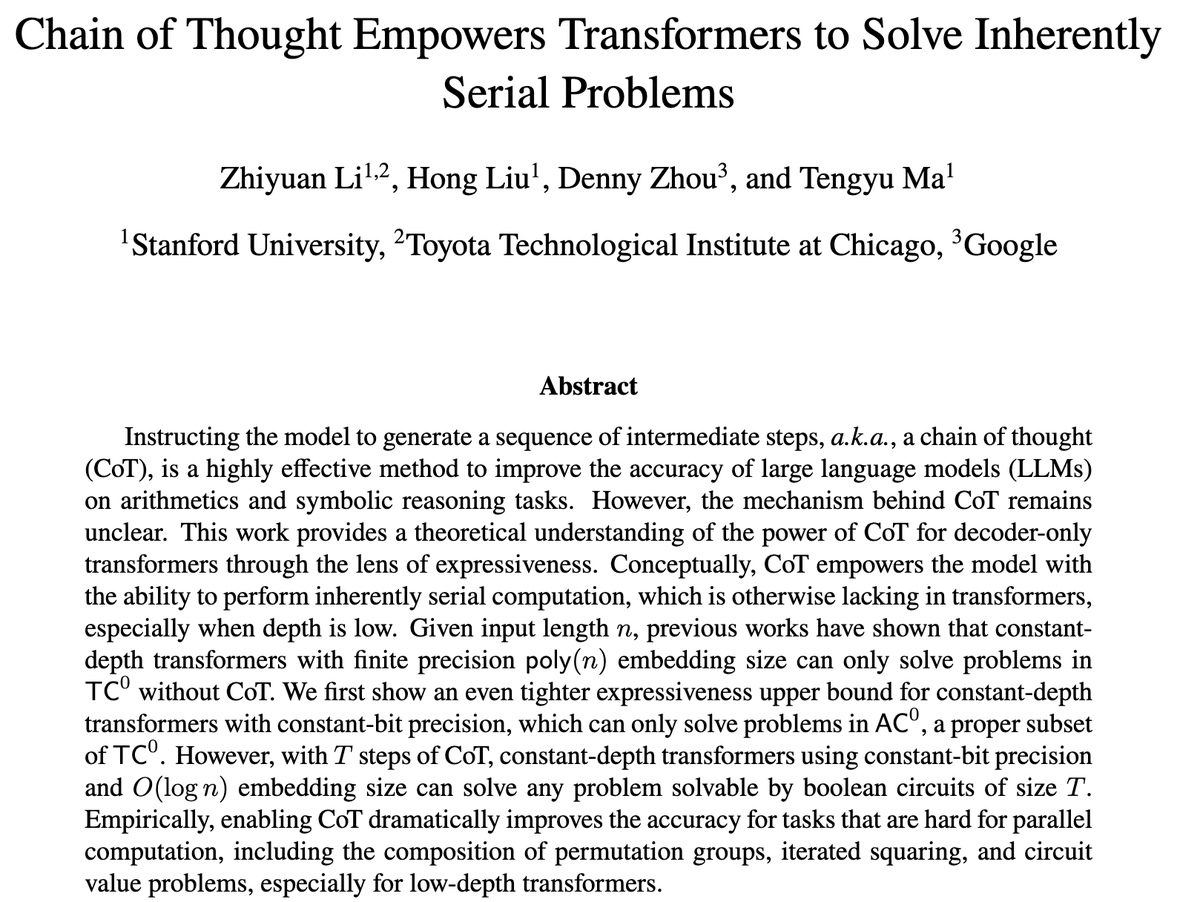

Tengyu Ma

@tengyuma

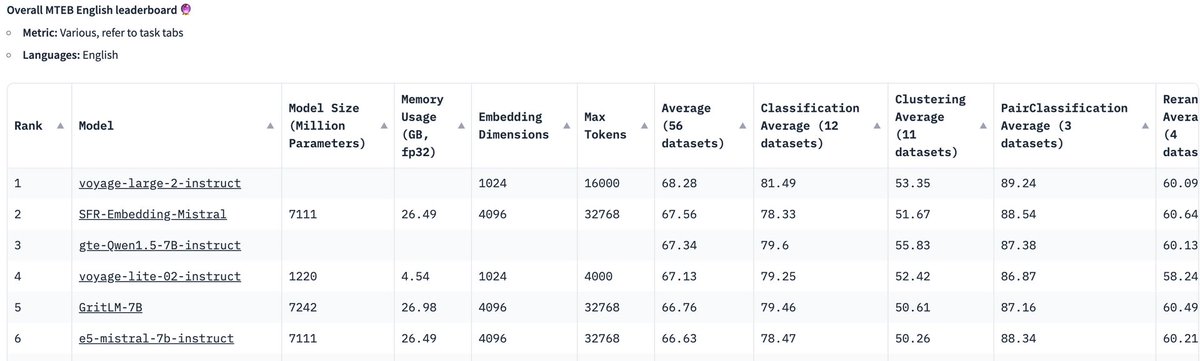

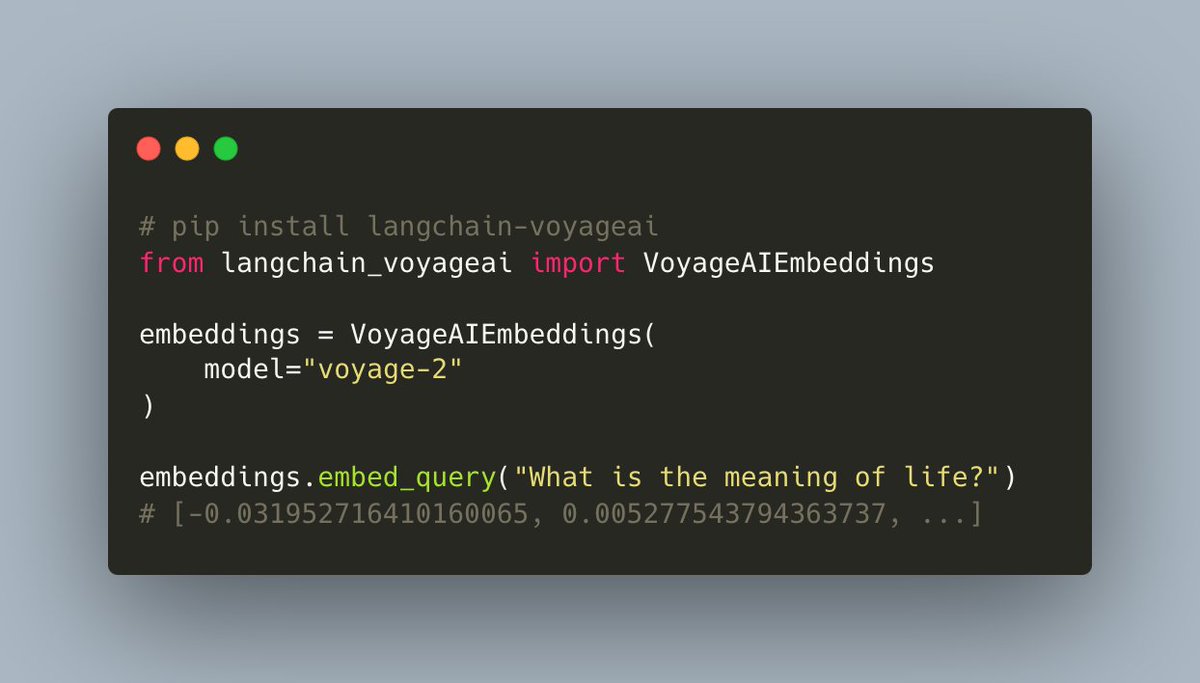

Assistant professor at Stanford; co-founder of Voyage AI (https://t.co/wpIITHLgF0).

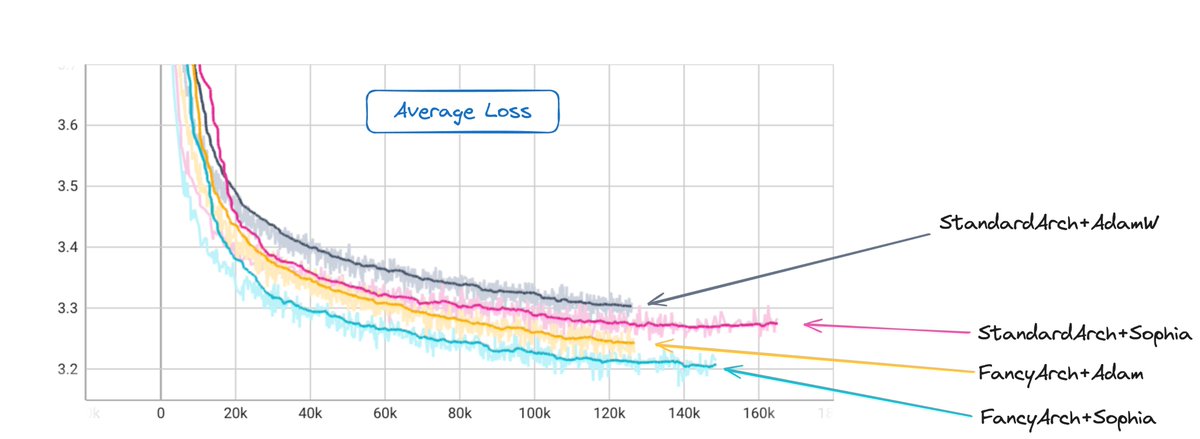

Working on ML, DL, RL, LLMs, and their theory.

ID: 314395154

http://ai.stanford.edu/~tengyuma

10-06-2011 05:40:55

411 Tweets

26.1K Followers

515 Following