Language Technologies Institute | @CarnegieMellon

@LTIatCMU

The Language Technologies Institute in Carnegie Mellon University's @SCSatCMU

ID:4901650162

http://lti.cs.cmu.edu 12-02-2016 15:12:09

1.4K Tweets

9.2K Followers

233 Following

Multitask learning (MTL) is known to enhance model performance on average, yet its effect on group fairness is under-explored. In our recent #TMLR2024 paper with Lucio Dery Jnr Mwinm, Amrith Setlur, Aditi Raghunathan, Ameet Talwalkar & Graham Neubig, we address this gap!

openreview.net/forum?id=sPlhA…

(1/10)

Excited to share our work on FlexCap! It can provide visual captions at varying levels of granularity for any region in an image.

Website: flex-cap.github.io

See the 🧵 by Debidatta Dwibedi for more details!

It's likely that you were reading linear probing results wrong! 🤔 In our new paper for #ICASSP2024, we analyze probing via information theory to provide two pro tips: (1) use loss, not accuracy, and (2) stop worrying about the probe size. arxiv.org/abs/2312.10019 (1/n)

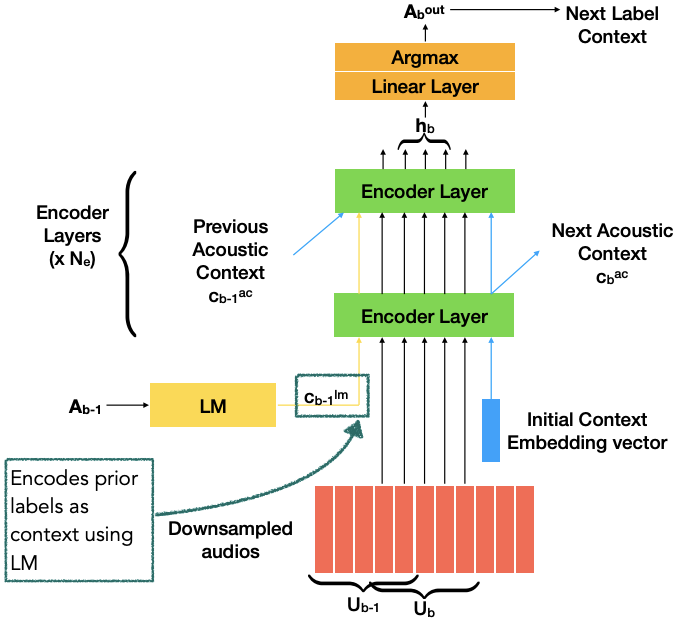

New #ICASSP2024 📜: we build a streaming semi-autoregressive ASR model that performs greedy NAR decoding within a block but keeps the AR property across blocks by encoding the labels of previous blocks, achieving strong performance at very low latency!

📜: arxiv.org/pdf/2309.10926…